STORY #7

Chairs, Designed by AI with Its “Imagination”

Ikuko Nishikawa

Professor, College of Information Science and Engineering

AI learns a “style” through big data.

In 2015, a paper demonstrated a striking technique that turns any photograph or picture into a piece in the style of a painter such as Picasso or van Gogh. Applications built on this technique are now available on smartphones.

Ikuko Nishikawa, who studies machine learning and optimization, is one of the researchers interested in this “painting style transfer” performed by a convolutional neural network. In particular, she works with corporations and designers to put style transfer to practical use.

A neural network is a computing model that simulates the biological neural networks of the brain. Its recent development has been accelerated by two factors: tremendous improvements in computer performance, in both processing time and resources, and the availability of huge amounts of data on the internet. Deep neural networks trained on such large amounts of data play a key role in present-day artificial intelligence (AI).

She explains the learning mechanism as follows. A neural network is designed to keep modifying itself until it acquires a desired ability, for example, the correct classification of given image data. To learn to recognize a cat in an image, the network is shown a large number of images and is required to answer “cat” only when a cat is actually present. If the answer is incorrect, the network is modified slightly, in a direction that makes the same mistake less likely the next time the same image is shown. This is simply repeated over and over, without any hint about what features a cat has. In parallel, the same procedure is run for the other objects to be recognized.

At first, the network can only answer randomly. Through the modifications made in response to wrong answers, it begins to discover clues for classifying the images correctly, based only on whether each answer was right or wrong. As this trial-and-error process is repeated, the network gradually finds effective clues, such as the presence of a certain shape or color, that serve as key elements for separating cats from other classes.

Taking shape as an example, the network starts with the simplest element, a line segment. Next, it combines simple elements into slightly more complex figures and colorings. In a layered network, the process of extracting elements (filtering, or convolution) is repeated at every layer, and by combining the results, the network gradually extracts more abstract objects such as faces and coats of fur. Ultimately, it judges whether a given image has enough of the characteristics of a cat, which leads to the answer.
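The error-driven loop described above can be illustrated with a very small sketch. It assumes a single-layer perceptron rather than the deep convolutional network in the article, and it uses hypothetical four-pixel “images” in which label 1 stands for “cat.” The rule behind the labels (the first two pixels are both bright) is never given to the network; it only learns from being right or wrong. All names here (`predict`, `train`, `SAMPLES`) are illustrative.

```python
def predict(weights, bias, pixels):
    """Answer 1 ("cat") if the weighted sum of the pixels is positive, else 0."""
    total = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if total > 0 else 0


def train(samples, max_epochs=200, step=1):
    """Nudge the weights only when an answer is wrong (perceptron rule)."""
    weights = [0] * len(samples[0][0])  # the network starts knowing nothing
    bias = 0
    for _ in range(max_epochs):
        mistakes = 0
        for pixels, label in samples:
            error = label - predict(weights, bias, pixels)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                # Move slightly in the direction that corrects this mistake.
                weights = [w + step * error * p for w, p in zip(weights, pixels)]
                bias += step * error
        if mistakes == 0:  # every training image is now answered correctly
            break
    return weights, bias


# Hypothetical toy data: (pixels, label). The labelling rule is hidden from train().
SAMPLES = [
    ([1, 1, 0, 0], 1), ([1, 1, 1, 0], 1), ([1, 1, 0, 1], 1), ([1, 1, 1, 1], 1),
    ([0, 0, 0, 0], 0), ([1, 0, 1, 1], 0), ([0, 1, 1, 1], 0), ([0, 0, 1, 1], 0),
    ([1, 0, 0, 0], 0), ([0, 1, 0, 0], 0),
]

weights, bias = train(SAMPLES)
print("learned weights:", weights, "bias:", bias)
```

A real image classifier differs in scale and structure (many layers, convolution filters, gradient-based updates), but the feedback principle is the same: change the network a little after each wrong answer and repeat.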

“‘Painting style transfer’ combines ‘style’ features and ‘content’ features that are extracted separately from different photographs or painters’ works,” she says. Content means the shapes and positions of the objects in an image, while style is the set of characteristics unique to an image other than its content, such as coloring, patterns, textures, and overall feel, e.g. “like van Gogh.” For style transfer, a deep neural network is used to extract the style features from a model image, such as a work by one of the famous painters mentioned above. Then, starting from content of the user’s choice, the “transferred” image is generated by continuously modifying it pixel by pixel until its style matches the target style.
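The pixel-by-pixel modification can be sketched in a few lines, under strong simplifying assumptions. The article does not say how style is measured; this sketch borrows the Gram-matrix summary commonly used in neural style transfer, and it treats the raw pixel channels themselves as the “features,” where a real system would use the outputs of a deep network’s layers. The tiny two-channel images, the weights, and all function names are hypothetical.

```python
def gram(image):
    """Average channel-by-channel products over all pixels (positions are ignored)."""
    n_channels = len(image[0])
    return [[sum(px[i] * px[j] for px in image) / len(image)
             for j in range(n_channels)]
            for i in range(n_channels)]


def style_distance(image, style_gram):
    g = gram(image)
    return sum((g[i][j] - style_gram[i][j]) ** 2
               for i in range(len(g)) for j in range(len(g)))


def content_distance(image, content):
    return sum((a - b) ** 2
               for px, ref in zip(image, content)
               for a, b in zip(px, ref))


def total_loss(image, content, style_gram, content_weight):
    return (style_distance(image, style_gram)
            + content_weight * content_distance(image, content))


def transfer(content, style, steps=200, rate=0.1, content_weight=0.1, eps=1e-5):
    """Gradient descent on the pixels, using finite-difference gradients."""
    style_gram = gram(style)
    image = [list(px) for px in content]  # start from the content image
    for _ in range(steps):
        grad = []
        for p in range(len(image)):
            row = []
            for c in range(len(image[p])):
                original = image[p][c]
                image[p][c] = original + eps
                up = total_loss(image, content, style_gram, content_weight)
                image[p][c] = original - eps
                down = total_loss(image, content, style_gram, content_weight)
                image[p][c] = original
                row.append((up - down) / (2 * eps))
            grad.append(row)
        # Modify every pixel value slightly in the direction that lowers the loss.
        image = [[v - rate * g for v, g in zip(px, row)]
                 for px, row in zip(image, grad)]
    return image


# Hypothetical images: four pixels, two channels each, values roughly in [0, 1].
CONTENT = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.1], [0.2, 0.1]]
STYLE = [[0.1, 0.9], [0.2, 0.8], [0.1, 0.7], [0.0, 0.9]]

result = transfer(CONTENT, STYLE)
target = gram(STYLE)
print("style distance before:", style_distance(CONTENT, target))
print("style distance after: ", style_distance(result, target))
```

The `content_weight` term keeps the result close to the original content while the style term pulls its statistics toward the model image; the balance between the two is a design choice, not something the article specifies.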

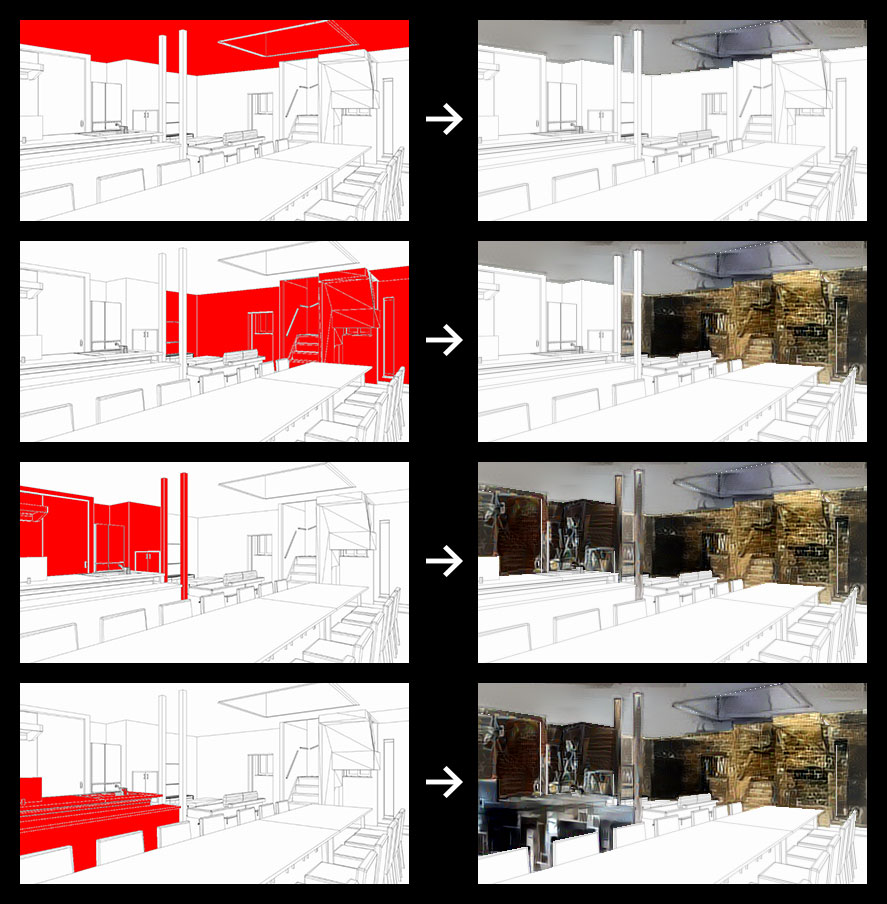

For joint research with art designers and housing manufacturers, she proposed a local style transfer, which divides an image into multiple regions and obtains the style of each region. “By transferring the style of photographs of house interiors, such as resort-style or New York-style, onto a line drawing of another house’s interior perspective, anyone can simulate an interior image for a new house,” she says. With local style transfer, each style can be transferred only to specific areas of an interior perspective drawing, such as the living room furniture or the walls. The user can try out different photographs and choose a separate style source for the furniture, interior, floor material, and other fittings. She aims to improve the transfer algorithm to meet the detailed requirements of each application. “It would be great to freely create images that even users did not come up with on their own,” she says.
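The idea of obtaining a separate style for each region can be sketched as follows. This is only an illustration under assumptions: the article does not describe how regions are found or how style is measured, so the sketch takes hand-made region labels as given and summarizes each region with a Gram matrix of its pixel channels. The photographs, labels, and function names are all hypothetical.

```python
from collections import defaultdict


def gram(pixels):
    """Average channel-by-channel products over a set of pixels."""
    n_channels = len(pixels[0])
    return [[sum(px[i] * px[j] for px in pixels) / len(pixels)
             for j in range(n_channels)]
            for i in range(n_channels)]


def regional_styles(image, labels):
    """Group pixels by region label and summarize the style of each region."""
    regions = defaultdict(list)
    for px, label in zip(image, labels):
        regions[label].append(px)
    return {label: gram(pixels) for label, pixels in regions.items()}


# Hypothetical two-channel "photographs", flattened to pixel lists,
# with one hand-made region label per pixel.
RESORT_PHOTO = [[1.0, 0.0], [1.0, 0.0], [0.0, 0.5], [0.0, 0.5]]
RESORT_LABELS = ["sofa", "sofa", "wall", "wall"]
NEW_YORK_PHOTO = [[0.5, 0.5], [0.5, 0.5], [0.0, 1.0], [0.0, 1.0]]
NEW_YORK_LABELS = ["sofa", "sofa", "wall", "wall"]

resort = regional_styles(RESORT_PHOTO, RESORT_LABELS)
new_york = regional_styles(NEW_YORK_PHOTO, NEW_YORK_LABELS)

# The user mixes sources: sofa style from one photo, wall style from the other.
style_targets = {"sofa": resort["sofa"], "wall": new_york["wall"]}
print(style_targets)
```

Each entry of `style_targets` would then serve as the target style for the matching region of the line drawing, so that a style is applied only where the user wants it.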

Simulating an interior image by transferring the styles of various photographs onto different areas of an interior perspective drawing.

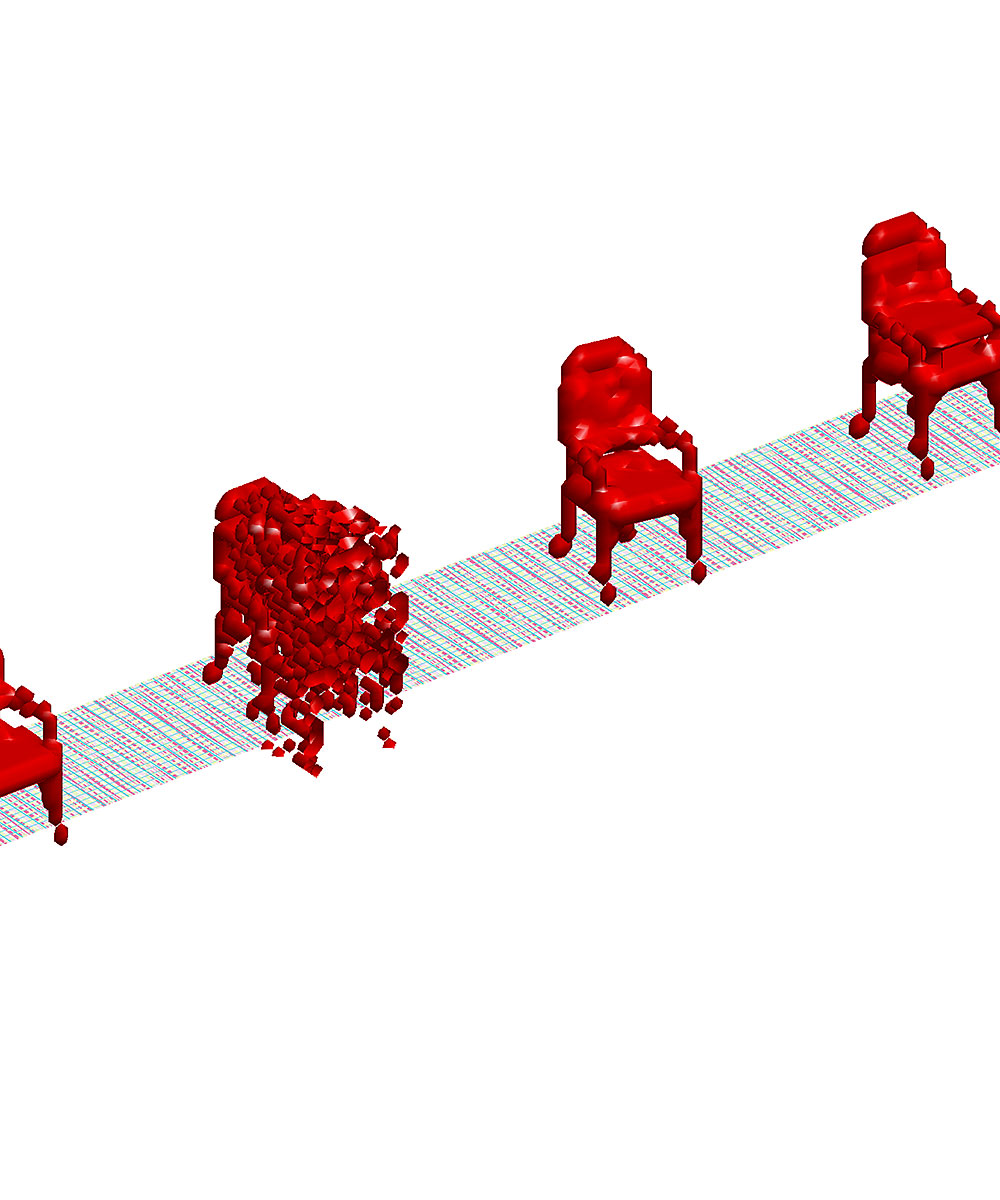

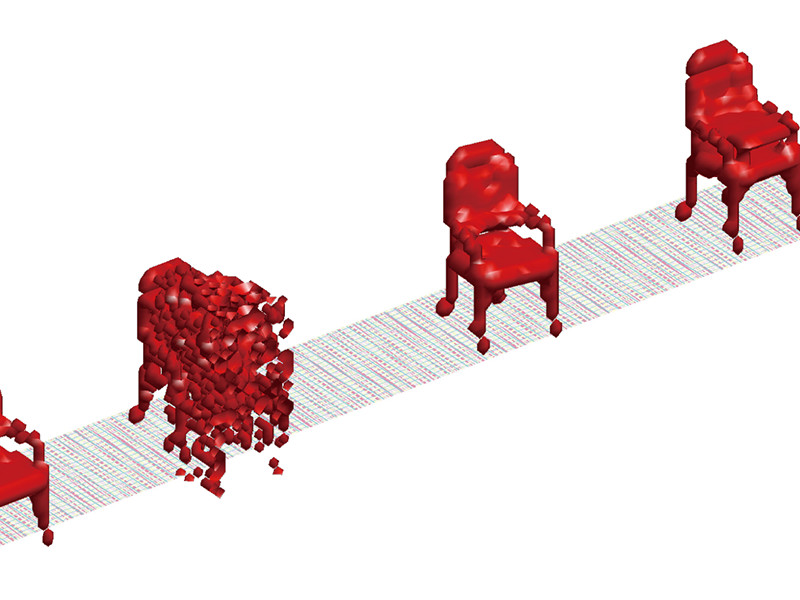

She is also studying an algorithm that interpolates 3D object data using a deep neural network. “When people look at a chair from behind, with some parts hidden, and still recognize it as a chair, they may be imagining the whole chair by filling in the invisible parts of the seat or legs. They use their past experience with chairs for this. This can be realized with machine learning,” she says. 3D data of chairs in various shapes is fed into the network, which learns what kind of shape is called a chair as a combination of features at multiple levels and their distribution. After learning, once the network recognizes a given object as a chair, it can interpolate the missing parts and generate the complete shape of a whole chair, even for a chair it has never been shown before. “If we apply this generative ability to product design, for example, we can input an incomplete design to get a complete one, or input an improper design to get a corrected one.”

Deep neural networks have driven the development of AI, and they will be applied to ever more diverse fields. Researchers like Nishikawa will become more important, as they can build bridges that bring these advanced systems into fields such as business, science, and even art.

When a large amount of 3D data of chairs in various shapes is fed into the deep neural network so that it learns “what a chair is” (in terms of shape), the network acquires the distribution of chair shapes as its internal representation. Once this is complete, it can use the internal representation to recognize a chair it has not been given before. At the same time, once it recognizes a given object as a chair, it generates a 3D chair model that matches the internal representation, interpolating any parts missing from the data. By repeating the cycle of recognition and generation again and again, starting from data of only the backs of chairs, the network gradually forms something like a whole chair. In the 15th cycle, it recognizes the chair it generated as a chair and outputs it as a chair consistent with the visible rear part. The result of the first round of generation is shown on the left page, while the 15th round is shown on the left side of the right page. These are chairs that AI created by using its imagination.
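The cycle of recognition and generation can be imitated with a toy model. The sketch below is not the deep network used in the research: it assumes a small Hopfield-style associative memory as a stand-in, and short vectors of +1 and -1 stand in for 3D shape data, with 0 marking a missing part. The visible part is kept fixed while the missing part is regenerated, and the loop stops once the memory reproduces the shape unchanged, which plays the role of “recognizing” it. All names and data are hypothetical.

```python
def train_memory(shapes):
    """Store the shapes in a weight matrix (Hebbian rule, no self-connections)."""
    n = len(shapes[0])
    weights = [[0] * n for _ in range(n)]
    for shape in shapes:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += shape[i] * shape[j]
    return weights


def generate(weights, state, visible):
    """Regenerate the hidden parts from the current state; visible parts stay fixed."""
    new_state = list(state)
    for i in range(len(state)):
        if i in visible:
            continue
        field = sum(w * s for w, s in zip(weights[i], state))
        if field != 0:  # leave the value as it is when there is no evidence
            new_state[i] = 1 if field > 0 else -1
    return new_state


def complete(weights, partial, max_cycles=15):
    """Repeat generation until the memory reproduces the shape unchanged."""
    visible = {i for i, v in enumerate(partial) if v != 0}
    state = list(partial)
    for cycle in range(1, max_cycles + 1):
        new_state = generate(weights, state, visible)
        if new_state == state:  # "recognized": nothing left to correct
            return state, cycle
        state = new_state
    return state, max_cycles


# Two hypothetical stored "chair shapes" (+1 = filled, -1 = empty).
CHAIR_A = [1, 1, 1, 1, -1, -1, -1, -1]
CHAIR_B = [1, -1, 1, -1, 1, -1, 1, -1]
memory = train_memory([CHAIR_A, CHAIR_B])

# Only the first half is visible; 0 marks the missing part.
seen_from_behind = [1, 1, 1, 1, 0, 0, 0, 0]
completed, cycles = complete(memory, seen_from_behind)
print(completed, "after", cycles, "cycles")
```

In this toy case the memory can only reproduce shapes it has stored, whereas the network described in the article learns a distribution over chair shapes and can therefore produce chairs it was never shown.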

- Ikuko Nishikawa

- Professor, College of Information Science and Engineering

- Subjects of research: (1) machine learning: neural networks, pattern recognition; (2) system optimization: logistics, power transactions, structural design, VLSI design, etc.; (3) bioinformatics: estimation of post-translational modification sites of human proteins, classification of brain activity data, circuit models and simulation of insect brains, etc.

- Research keywords: Computational intelligence, machine learning, optimization, bioinformatics