STORY #4

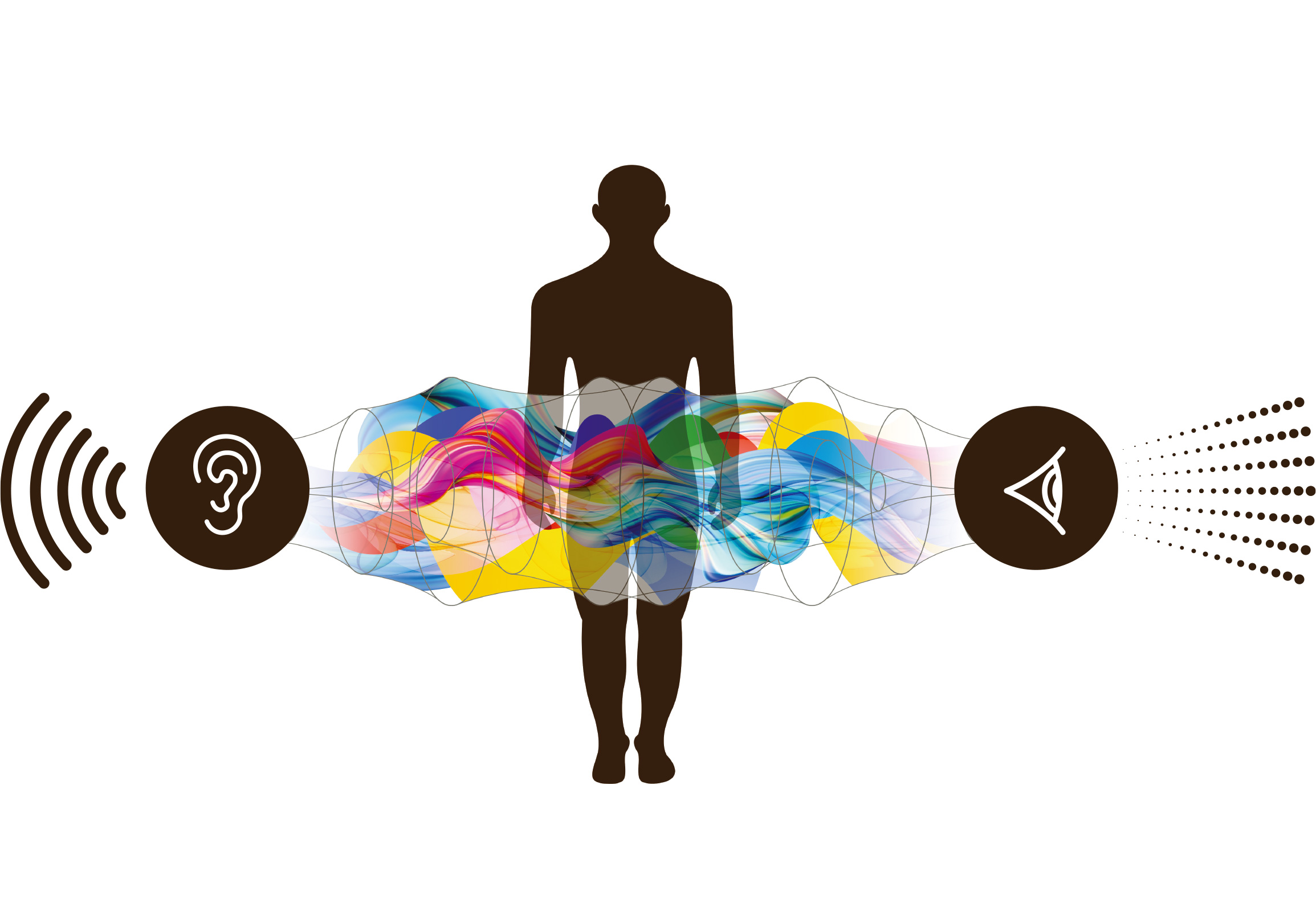

Sound changes perception of movements and vision

Masayoshi Nagai, Ph.D.

Professor, College of Comprehensive Psychology

Daiki Yamasaki, Ph.D.

Project Researcher, Research Organization of Open Innovation & Collaboration; JSPS Research Fellow, Japan Society for the Promotion of Science

Yusuke Suzuki

Doctor’s program student, Graduate School of Human Science; JSPS Doctoral Course Student (DC), Japan Society for the Promotion of Science

Exploring the intriguing relationship among perception, cognition, and the body

Shot-putters often shout as they throw. Research in sport psychology has reported that grunting helps athletes exert greater force. Human perception and cognition have an intriguing relationship with the body.

Professor Masayoshi Nagai of the College of Comprehensive Psychology is interested in how human information processing systems, such as perception and cognition, are modulated by bodily states, emotions, and communication with others. One such study examined how closely people with autistic traits synchronize their stepping cycles with those of others. “Have you ever found yourself automatically synchronizing your stride with someone walking beside you? Behavior and emotions are known to spread between people through mimicry and to cause unconscious synchronization. However, people with higher autistic traits rarely synchronize their steps with others,” says Nagai. Nagai has also examined how the size of body movements influences creative thinking, finding that the larger the movement, the greater the divergent creativity. In addition, he has identified various relationships among perception, cognition, muscle responses, and vocalization; for example, physical movements become larger as vocalization becomes louder.

In recent years, Nagai has extended his research to the auditory senses. Together with his student Yusuke Suzuki, he has been exploring the relationship between sensory information and vocal characteristics. “Non-arbitrary, systematic associations between perceptual stimuli, attributes, or dimensions in different sensory modalities are called crossmodal correspondences. Many studies have been conducted on them. For example, auditory pitch has been shown to correspond with a wide variety of perceptual attributes. However, no research had focused on crossmodal correspondences between perceptual attributes (especially visual attributes) and vocal characteristics produced as a motor response,” explains Suzuki. Nagai and Suzuki conducted experiments to determine whether a correspondence exists between the high or low spatial position of a visual stimulus and the high or low pitch of a vocal response.

In the compatible condition, participants were asked to vocalize the vowel [a] at a high pitch when a visual stimulus appeared high on the screen and at a low pitch when it appeared low. In the incompatible condition, the mapping was reversed: participants vocalized at a low pitch when the stimulus appeared high and at a high pitch when it appeared low. “We compared the onset of vocalization (the reaction time) between the two conditions and found that reaction times were shorter in the compatible condition than in the incompatible condition. These results indicate that the representations of high and low in spatial position and in vocal pitch are shared and processed in a common system,” says Suzuki. According to Suzuki, consistent with prior studies, perception and action interact bidirectionally, and this holds even for high- or low-pitched vocalizations made in response to visual stimuli in high or low positions.

Suzuki also examined situations in which an object moves through space from low to high or, conversely, from high to low. Vocalizing at a high pitch was easier in the former case, and vocalizing at a low pitch was easier in the latter. As for applications of these findings, Nagai says, “Such findings can be applied to voice training for people with speech disorders, or to amusement and entertainment such as karaoke.”

Daiki Yamasaki, a postdoctoral researcher who studies auditory and visual perception of three-dimensional space with Nagai, has also presented intriguing findings on how our visual experience is influenced by sounds around the body.

One such study revealed that when we see an object while listening to a sound approaching the body, the object appears larger than it actually is. “What is interesting is that this phenomenon holds true only when the object is in the same spatial location as the approaching sound,” explains Yamasaki. Because an approaching object in the environment could pose a threat to living things, this perceptual system, which adaptively integrates audiovisual stimuli, may serve a defensive function.

Although vision and audition influence each other, it is easy to assume that vision has an overwhelming advantage over audition in grasping the space around us. Yamasaki, however, produced a surprising result by demonstrating that audition plays an important role in spatial perception when vision is restricted.

The researchers placed participants in a darkened laboratory with one eye covered by an eye mask. When participants observed an object under these visually restricted conditions, they could judge its apparent size but not its distance. Under normal circumstances, we visually estimate how large an object actually is by combining its apparent size with its distance. In this experimental setup, however, distance information was unavailable, so that estimate was not possible from vision alone. To manipulate auditory distance, the researchers used binaural recording, a technique in which microphones are placed in the participant’s ear canals. Sounds presented at various locations were recorded in advance; when these recordings were played back, participants heard realistic sounds and perceived the distances to the sound sources. This method allowed the researchers to manipulate sounds in three-dimensional space experimentally without placing loudspeakers. The participants were then asked to estimate the actual size of a visual stimulus paired with sounds recorded at various distances, and their size estimates based on auditory distance information proved surprisingly accurate.
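The geometry behind combining apparent size and distance can be illustrated with the textbook size-distance relation S = 2D·tan(θ/2), where θ is the visual angle (apparent size) and D is the viewing distance. The snippet below is our own illustration, not the researchers' model or analysis, and the function name `physical_size` is hypothetical. It shows why, when distance is unavailable, a single apparent size is consistent with many different physical sizes.

```python
import math


def physical_size(visual_angle_deg: float, distance_m: float) -> float:
    """Return the physical size (m) implied by a visual angle (degrees) at a distance (m).

    Illustrative only: uses the geometric relation S = 2 * D * tan(theta / 2).
    """
    theta = math.radians(visual_angle_deg)
    return 2.0 * distance_m * math.tan(theta / 2.0)


# The same 2-degree apparent size implies different physical sizes
# depending on the distance the observer assumes.
for d in (0.5, 1.0, 2.0):
    print(f"distance {d} m -> size {physical_size(2.0, d):.3f} m")
```

For a fixed visual angle, the implied size scales in direct proportion to the assumed distance, which is the ambiguity that auditory distance information helped resolve in the experiment.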

“This experiment has shown that audition, which has been thought to be less reliable than vision in the spatial domain, compensates for visual ambiguity in three-dimensional spatial perception,” says Yamasaki. “People use their visual and auditory senses in a complementary manner to ensure reliable perception of the three-dimensional space around them. Moreover, our findings suggest that the brain may employ different audiovisual processing strategies depending on the relationship between the body and sensory stimuli in a given space,” he explains.

Nagai and his students are also increasingly interested in how the integration of audiovisual information for spatial processing differs from person to person. “For example, autistic people are known for their distinctive audiovisual information processing. We are trying to show, in a novel way, that the distinctive communication style of autistic people is closely related to their style of audiovisual integration.” Much is expected of the new avenues that the research by Nagai and his colleagues may open up.

- Yusuke Suzuki (Photo Left)

- Doctor’s program student, Graduate School of Human Science; JSPS Doctoral Course Student (DC), Japan Society for the Promotion of Science

- Specialty: Experimental Psychology

- Research Themes: Vocal processing; Crossmodal correspondence

- Masayoshi Nagai, Ph.D. (Photo Center)

- Professor, College of Comprehensive Psychology

- Specialty: Cognitive Psychology

- Research Themes: Embodiment, communication, and cognition; Perception of dynamic scenes

- Daiki Yamasaki, Ph.D. (Photo Right)

- Project Researcher, Research Organization of Open Innovation & Collaboration; JSPS Research Fellow, Japan Society for the Promotion of Science

- Specialty: Experimental Psychology

- Research Themes: Audiovisual interaction; Three-dimensional spatial perception