Evaluation

This module covers weeks 12-13 of the course.

Note that this material is subject to ongoing refinements and updates!

In IxD, everything “from low-tech prototypes to complete systems, from a particular screen function to the whole workflow, and from aesthetic design to safety features” can be evaluated (Sharp et al., 2019, p. 498). Evaluation can take place in a research lab, but also “in the wild”, and can be undertaken through direct engagement with users, but also through more passive, indirect methods.

Field studies "in the wild" can:

- Help identify opportunities for new technology

- Establish the requirements for a new design

- Facilitate the introduction of technology or inform deployment of existing technology in new contexts (Sharp et al., 2019, p. 504).

Why conduct evaluations?

Evaluations are usually conducted after designers have produced something (either a prototype or more concrete design) based on user requirements. These designs are then "evaluated to see whether the designers have interpreted the users’ requirements correctly and embodied them in their designs appropriately" (Sharp et al., 2019, p. 499).

Types of evaluation

Three broad types of evaluation:

- Controlled settings directly involving users

- Natural settings involving users

- Any (other) settings not directly involving users (Sharp et al., 2019, p. 500).

Some methods used in evaluation are the same as those used for discovering requirements, such as observation, interviews, and questionnaires. A common type of evaluation conducted in a controlled setting is usability testing, where the “primary goal is to determine whether an interface is usable by the intended user population to carry out the tasks for which it was designed” (Sharp et al., 2019, p. 501).

The aforementioned System Usability Scale (SUS) is still a common way of testing the usability of a system. See the original version here:

Brooke, J. (1986). SUS - A quick and dirty usability scale.

https://digital.ahrq.gov/sites/default/files/docs/survey/systemusabilityscale%2528sus%2529_comp%255B1%255D.pdf
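As a rough illustration of how SUS responses are usually turned into a single score, the following is a minimal sketch in Python. It assumes the standard ten-item questionnaire answered on a 1 to 5 scale, where odd-numbered items are positively worded and even-numbered items are negatively worded. The function name `sus_score` is hypothetical and not part of any library.

```python
def sus_score(responses):
    """Return a SUS score (0-100) from ten responses on a 1-5 scale.

    Assumes the standard item order: odd-numbered items are positively
    worded, even-numbered items are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if not 1 <= response <= 5:
            raise ValueError("each response must be between 1 and 5")
        if item_number % 2 == 1:
            # Odd items contribute (response - 1)
            total += response - 1
        else:
            # Even items contribute (5 - response)
            total += 5 - response

    # The summed contributions (0-40) are scaled to a 0-100 range
    return total * 2.5


# Example: one participant's (made-up) answers to the ten items
participant = [4, 2, 5, 1, 4, 2, 4, 2, 5, 1]
print(sus_score(participant))
```

Note that the result is a score out of 100, not a percentage, and scores from several participants are typically averaged.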

Ways of evaluation

Three ways to evaluate:

- Design guidelines, heuristics, principles, rules, laws, policies, etc.

- Remotely-collected data

- Predictive models

Design guidelines, heuristics, principles, rules, laws, policies, etc.

Some examples:

- Revisit Nielsen’s heuristics and the SUS.

- Web accessibility guidelines, laws, and policies: W3C Web Accessibility Initiative (WAI)

- Note that several countries (including Japan) have laws for making certain content accessible online: Ministry of Education, Culture, Sports, Science and Technology (MEXT) web accessibility policy.

- Shneiderman's classic Eight Golden Rules

Shneiderman's Eight Golden Rules of Interface Design are a seminal set of principles for interface design:

- Strive for consistency

- Seek universal usability

- Offer informative feedback

- Design dialogs to yield closure

- Prevent errors

- Permit easy reversal of actions

- Keep users in control

- Reduce short-term memory load (Shneiderman et al., 2016).

An example of a cognitive walkthrough for creating a Spotify playlist:

Dalrymple, B. (2018). Cognitive Walkthroughs. https://medium.com/user-research/cognitive-walkthroughs-b84c4f0a14d4

Web analytics

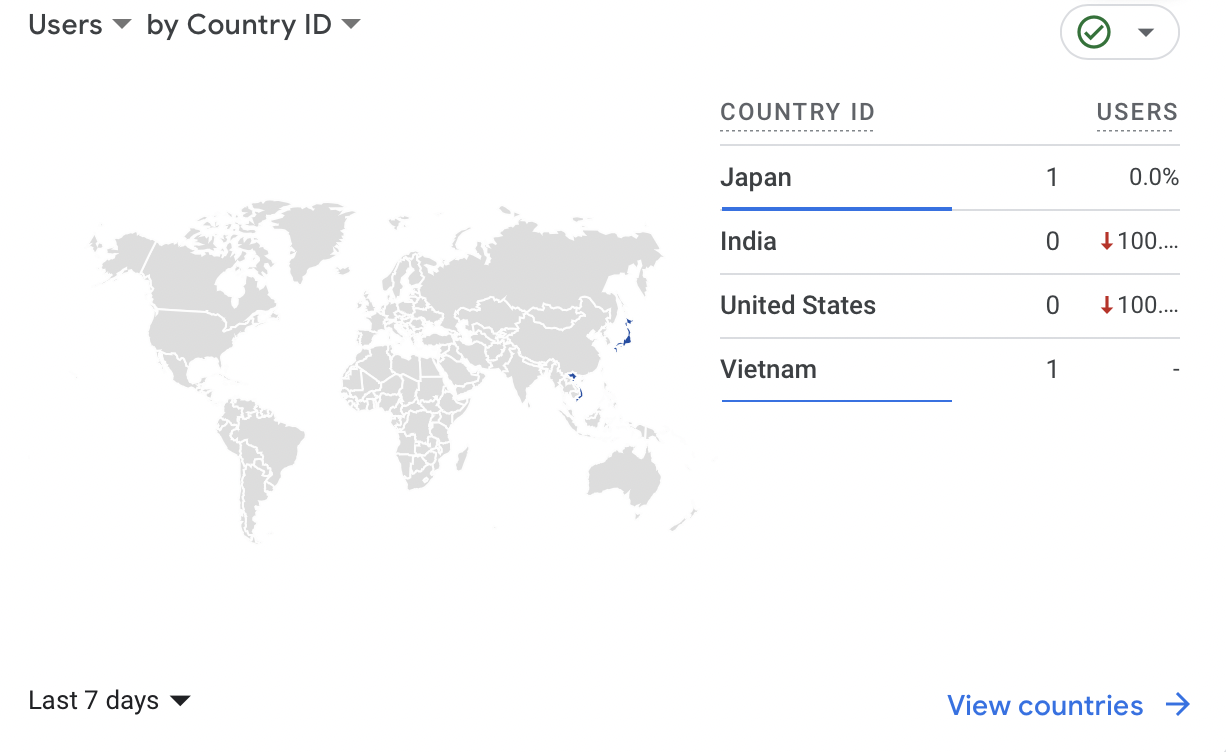

Google Analytics is a common platform for web analytics:

https://marketingplatform.google.com/about/analytics/

Figure: Example of functionality from Google Analytics, showing visitors to a page according to country
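To give a sense of what sits behind a "visitors by country" report, here is a minimal sketch in Python. It does not use the Google Analytics API; the records, field order, and the function name `visitors_by_country` are all made up for illustration, and a real analytics platform would collect and aggregate this data for you.

```python
from collections import defaultdict

# Hypothetical page-view records: (visitor_id, country, page)
page_views = [
    ("v1", "Japan", "/home"),
    ("v2", "Japan", "/home"),
    ("v1", "Japan", "/home"),      # repeat view by v1, counted only once
    ("v3", "Australia", "/home"),
    ("v4", "Japan", "/about"),     # different page, ignored below
]


def visitors_by_country(records, page):
    """Count distinct visitors to one page, grouped by country."""
    seen = defaultdict(set)
    for visitor_id, country, viewed_page in records:
        if viewed_page == page:
            seen[country].add(visitor_id)
    return {country: len(ids) for country, ids in seen.items()}


counts = visitors_by_country(page_views, "/home")
print(counts)
```

The sketch counts distinct visitors rather than raw page views, which is one of several choices an analytics tool has to make when reporting traffic.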

A/B testing

A/B testing is a "way to evaluate a website, part of a website, an application, or an app running on a mobile device... by carrying out a large-scale experiment to evaluate how two groups of users perform using two different designs—one of which acts as the control and the other as the experimental condition, that is, the new design being tested" (Sharp et al., 2019, p. 574).

Figure: A basic visual explanation of A/B testing. A is the original design, while B is a variation of it. Users are split into two groups: one that tests the original and one that tests the variation.

A list of A/B testing examples can be found towards the bottom of this page: https://business.adobe.com/blog/basics/learn-about-a-b-testing
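Once both groups have used their design, the results still need to be compared. One common way to do this (an assumption here, not something prescribed by Sharp et al.) is a two-proportion z-test on a measure such as conversion rate. The sketch below uses only the Python standard library; the function name and the visitor numbers are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of design A (control) and design B.

    conv_a, conv_b: number of users who converted in each group
    n_a, n_b:       total number of users in each group
    Returns (z, p_value) for a two-sided test.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one user")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled rate under the null hypothesis that A and B perform the same
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("no variation in outcomes; test is undefined")

    z = (rate_b - rate_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Made-up example: 100 of 1000 users convert on A, 150 of 1000 on B
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference between A and B is unlikely to be due to chance alone, although in practice sample size, test duration, and the choice of metric all need to be planned before the experiment starts.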

Predictive modelling, such as Fitts's Law

We have covered Fitts's Law previously, but it has some interesting applications to evaluation, such as:

- “evaluating systems where the time to locate an object physically is critical to the task at hand...

- [examining] the effect of the size of the physical gap between displays and the proximity of targets in multiple-display environments...

- [evaluating] the efficacy of simulating users with motor impairments interacting with a head-controlled mouse pointer system” (Sharp et al., 2019, pp. 576-577).

In simpler terms, we can say that big objects that are near are easier to access, while those that are small and further away are harder to access:

© Interaction Design Foundation, CC BY-SA 4.0
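The relationship between distance, size, and difficulty can also be sketched numerically. The code below uses the widely cited Shannon formulation of the law, MT = a + b * log2(D / W + 1), where D is the distance to the target and W is its width. The constants `a` and `b` are normally estimated from experimental data for a particular device and user group; the default values here are placeholders chosen only for illustration.

```python
import math


def index_of_difficulty(distance, width):
    """Index of difficulty in bits (Shannon formulation)."""
    return math.log2(distance / width + 1)


def movement_time(distance, width, a=0.1, b=0.15):
    """Predicted time to reach a target.

    a and b are empirically fitted constants; the defaults are
    hypothetical placeholders, not measured values.
    """
    return a + b * index_of_difficulty(distance, width)


# A large, nearby button versus a small, distant one (units are arbitrary)
near_big = movement_time(distance=100, width=100)
far_small = movement_time(distance=700, width=20)
print(near_big < far_small)
```

With any positive `b`, the small, distant target always yields the longer predicted time, which matches the intuition described above.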

© Paul Haimes at Ritsumeikan University