STORY #3

Toward Robots We Can Live Together

Tadahiro Taniguchi, Ph.D.

Professor, College of Information Science and Engineering

Symbol Emergence in Robotics and Integrated Cognitive Architecture

Artificial intelligence (AI) is now part of everyday life. If you have a smart speaker, you may ask it to play music of your choice. When writing a letter in a foreign language, you may turn to an automatic translation tool. “I admit they are useful, but they are not intended to model the total human cognitive system,” says Tadahiro Taniguchi, Professor at the College of Information Science and Engineering, Ritsumeikan University.

As one of ten fellows at the Ritsumeikan Advanced Research Academy (RARA), launched in AY2022 to support leading researchers, Taniguchi is responsible for expanding the university’s research network and advancing global and innovative research as a node that connects researchers with one another and with research. To fulfill this mission, Taniguchi plans to focus his efforts on three major research themes.

The first is the Creation of an Integrated Cognitive Architecture for Real-World Artificial Intelligence for Next Generation Symbiotic Societies. Most current AI research focuses on AI as a tool to replace certain aspects of human intelligence, as in the examples provided at the beginning of this article. However, human intelligence is more comprehensive and multimodal, with complex cognitive activities performed by integrating multiple types of sensory information within a single body. “Even a simple task in our daily life is performed using visual, auditory, and haptic information simultaneously,” Taniguchi explains. “To reproduce human intelligence in a single robot body, we must consider a process of cognitive development that comprehensively combines multimodal sensory information and have them support one another. We call this framework, which enables machine learning to support cognitive development, an integrated cognitive architecture.”

In 2020 and 2021, the Japanese Cabinet Office established Moonshot Goals for Achieving Well-Being and called for researchers to participate in themed studies. Taniguchi and his team are participating in Goal 1, the “Realization of a society in which human beings can be free from limitations of body, brain, space, and time by 2050,” and Goal 3, the “Realization of AI robots that autonomously learn, adapt to their environment, evolve in intelligence and act alongside human beings, by 2050.”

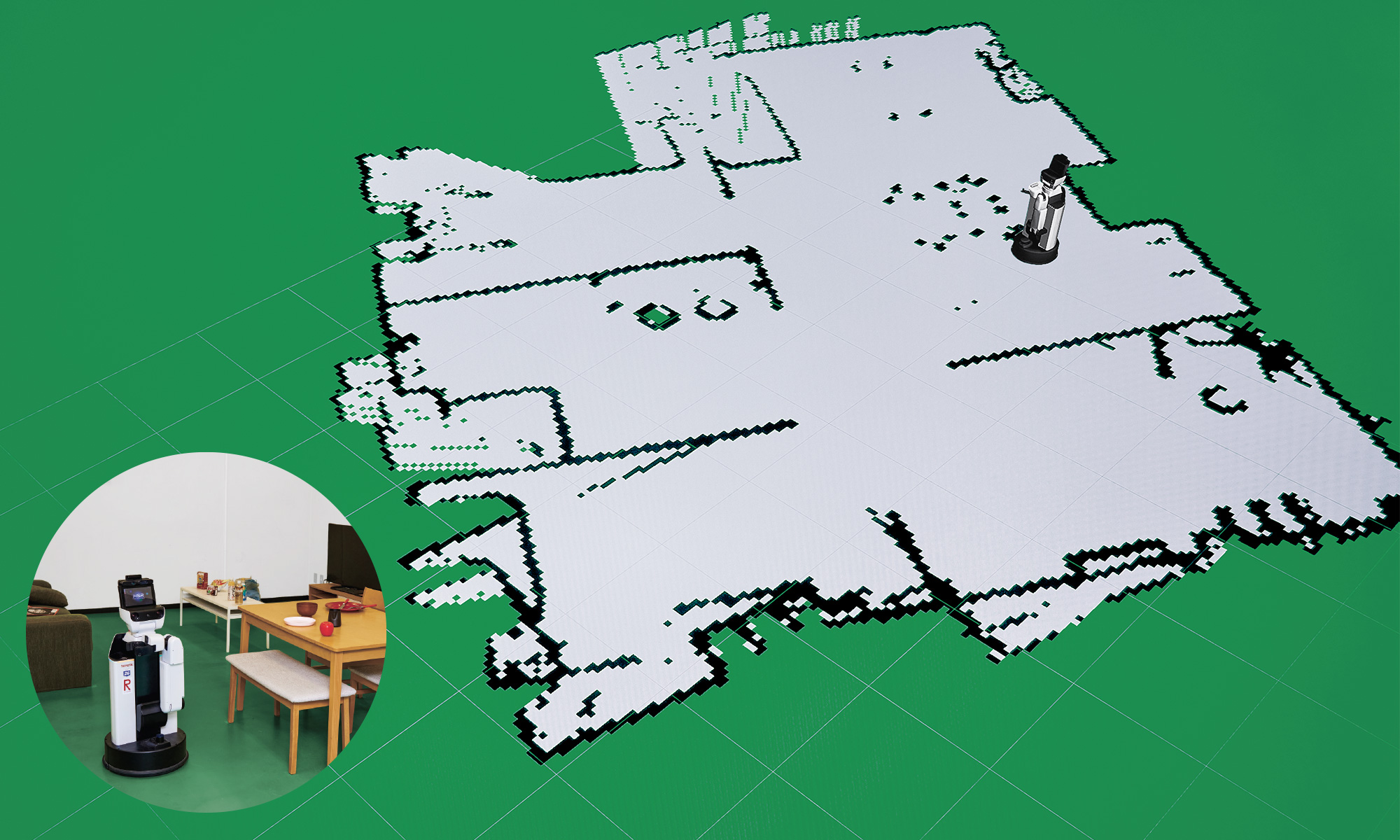

“For Goal 1, we are engaging in a project to create an avatar symbiotic society where everyone can play an active role without restrictions, led by Professor Hiroshi Ishiguro of Osaka University, which focuses on a teleoperated robot called Cybernetic Avatar (CA). Imagine a teleoperated service robot that is entirely manipulated by yourself. You cannot leave the robot alone while in action. It would be much easier if you could just ask it to do the work and leave the rest. For the robot to determine where to go and what to do to meet your request, it must either be pre-input with knowledge about the environment in which it is required to work or given the autonomy and ability to acquire knowledge about the location through interaction with the environment and/or people. The latter is our approach. We are willing to make a robot semi-autonomous and become able to communicate with us linguistically in a real-world environment.”

For Goal 3, Taniguchi’s team is participating in the Co-evolution of Human and AI-Robots to Expand Science Frontiers project, managed by Associate Professor Kanako Harada of the University of Tokyo. This project aims to develop robots that can perform surgery. Factory robots approach uniformly shaped and positioned objects along the same trajectory and repeat the same actions. Surgical robots, in contrast, must work on living bodies in which the shape and position of organs vary from patient to patient. The team seeks to create a robot that can work flexibly and acquire knowledge through soft interactions such as observing and poking the patient’s organs. This process can be described as an integrated cognitive activity using multiple types of sensory information. This approach is also called the world model-based approach.

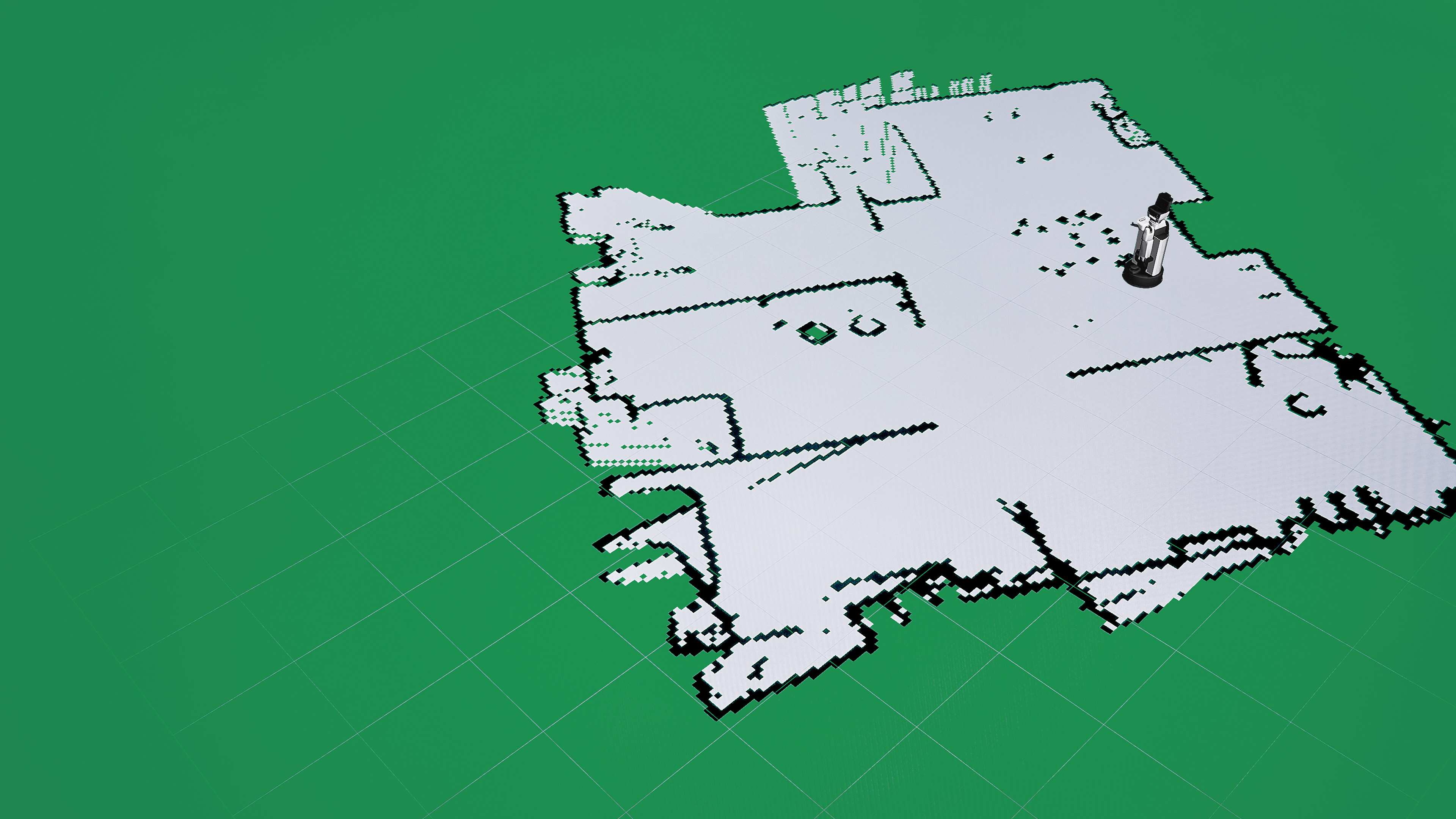

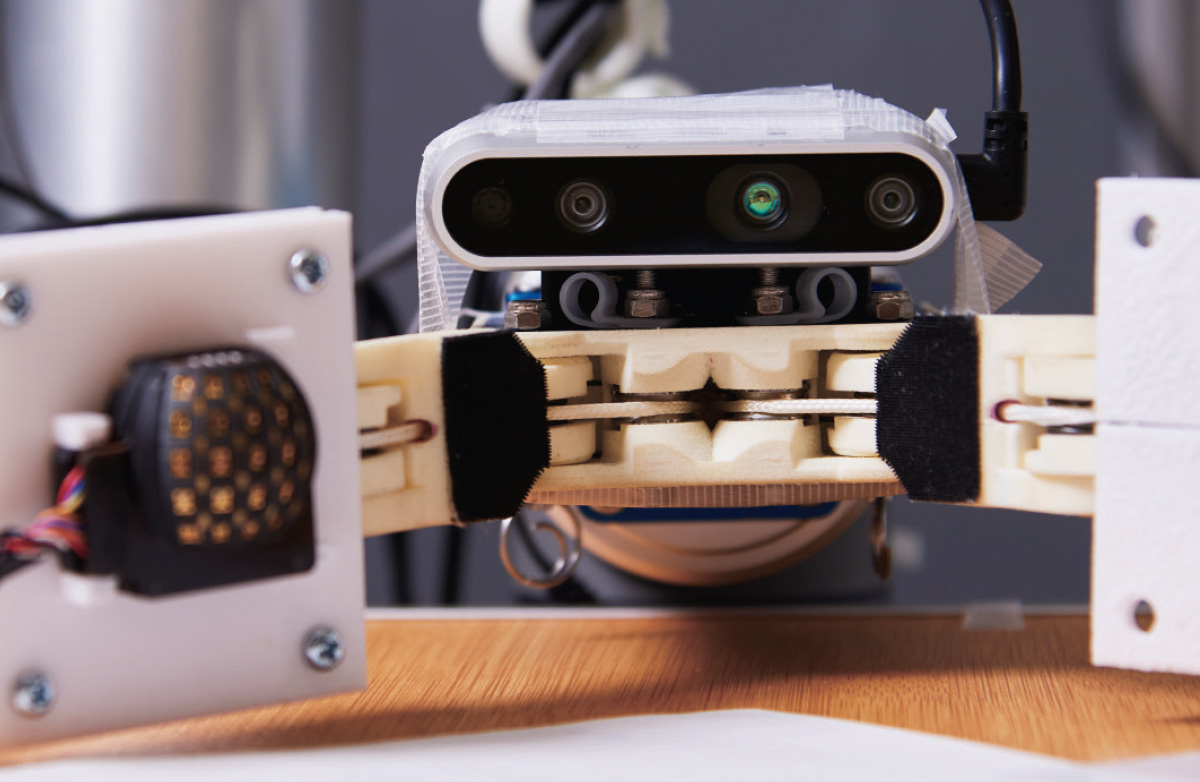

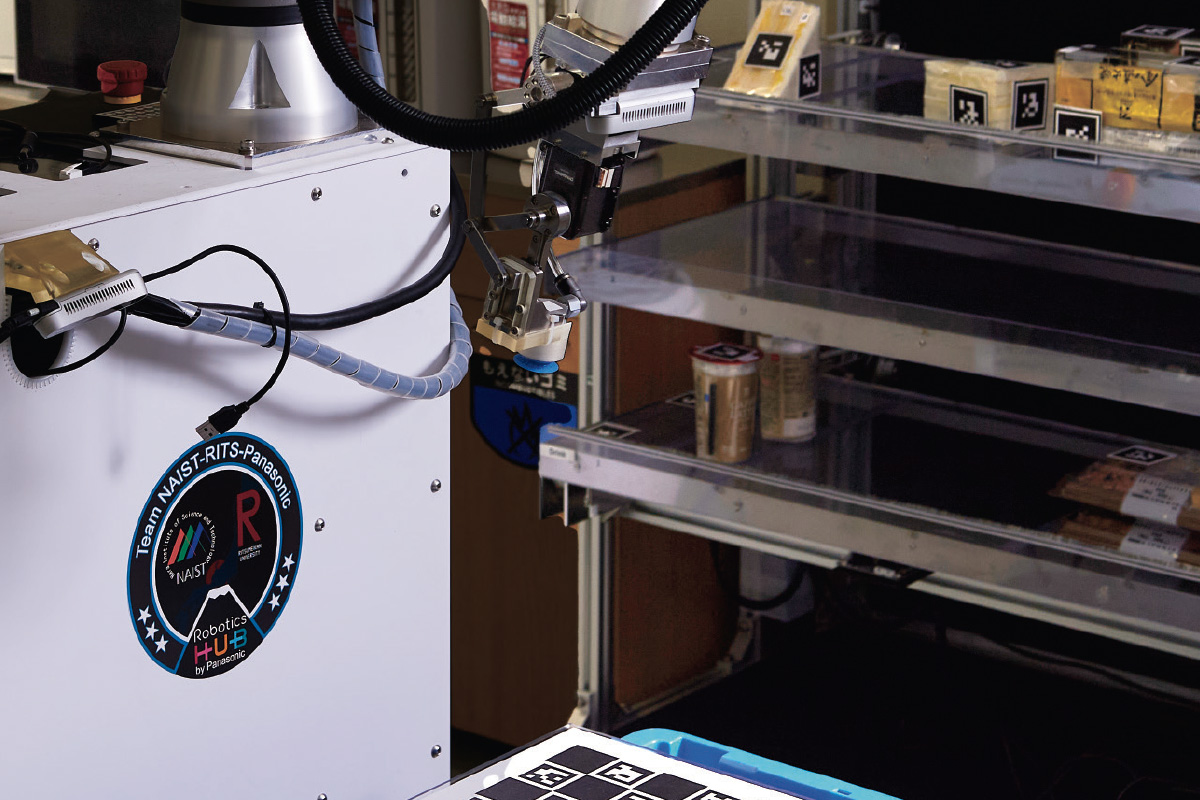

In some scientific experiments, researchers operate on rats to implant objects in their skulls. In such complex manipulation tasks, we humans recognize the present condition of the target system by perceiving the object’s appearance through our eyes, its contact forces through touch, and the sound it makes through our ears. These observations are not objective measurements but perceptions constructed from our senses. The photograph shows a robot performing an eggshell dissection task, which is a simulated operation. The robot learns the task through a world model approach, which constructs a state-space representation by extracting information from multiple modalities.

The second theme is Machine Learning Technology for Co-creative Learning between Humans and Robots Based on Symbol Emergence Systems. For about 15 years, Taniguchi has been advocating his original concept of symbol emergence systems, in which the meanings of words are determined bottom-up through the interactions of agents in the community to which they belong. For example, when we say “apple,” we tend to believe that the word “apple” and the fruit are bound to each other in a one-to-one relationship. As a matter of semiotic and linguistic fact, however, we are free to create the meanings of words as we interact with others and with the surrounding environment. “Apple” can also be the name of a color, and in other languages the same fruit is not called “apple.”

Taniguchi explains that in machine learning to date, human language has been treated as a teacher, and what the teacher defines is always correct. “We input a set of objects and their names to a robot,” he says. “However, humans do not learn things that way.” Taniguchi points out that in parent-child relationships, parents imagine their children’s intentions through their undeveloped language; in research conducted under interdisciplinary collaboration, researchers belonging to different fields of study sometimes have difficulty unifying terminology because the word meanings tend to be different. Language evolves in the process of defining the world to get along well with others; in that sense, it can be said to be a product of adaptation among human beings.

To assist us in our living environments, service robots must understand the environment in which they operate. To do so, the robots begin by generating a map based on sensor information. This environmental awareness includes perceiving the locations of all the objects in a place as well as the name of the place. Service robots are also expected to become capable of acquiring this awareness autonomously, without human intervention.

According to Taniguchi, language is also grounded in physical experience. Suppose we give a robot multimodal sensory information, for example by letting it hold various objects to sense their softness, observe them to acquire visual information, and shake them to hear their sound, together with a model that can properly organize the information. The robot then spontaneously starts classifying the objects. If we also talk to the robot repeatedly while it gathers this information, saying that certain objects are “soft,” the robot identifies “soft” as a word that relates to tactile sensation.
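The two steps in the paragraph above, forming categories from sensory readings without labels and then linking a heard word to one modality, can be illustrated with a toy Python sketch. It is not the model used by Taniguchi's team, which the article does not describe. It substitutes a tiny one-dimensional k-means for the categorization step and a simple co-occurrence difference for the word grounding. The 40 synthetic objects, the sensor values, and the names `two_means_1d` and `word_modality_scores` are all assumptions made for illustration.

```python
import numpy as np

def two_means_1d(x, iters=20):
    """Split 1-D sensor readings into two clusters (a deliberately tiny k-means)."""
    centers = np.array([x.min(), x.max()], dtype=float)
    for _ in range(iters):
        # Label 1 if the reading is closer to the second center, else 0.
        labels = (np.abs(x - centers[0]) > np.abs(x - centers[1])).astype(int)
        centers = np.array([x[labels == k].mean() for k in (0, 1)])
    return labels

def word_modality_scores(readings, heard_word):
    """For each modality, measure how strongly its clusters co-occur with a word."""
    scores = {}
    for name, x in readings.items():
        labels = two_means_1d(x)
        p0 = heard_word[labels == 0].mean()  # how often the word is heard in cluster 0
        p1 = heard_word[labels == 1].mean()  # how often the word is heard in cluster 1
        scores[name] = abs(p0 - p1)
    return scores

# Hypothetical toy world: 40 objects whose softness, brightness, and loudness
# vary independently of one another.
rng = np.random.default_rng(0)
idx = np.arange(40)
is_soft = idx % 2 == 0
is_bright = (idx // 2) % 2 == 0
is_loud = (idx // 4) % 2 == 0

def sense(flag):
    """Simulated sensor: about 0.8 when the property is present, about 0.2 otherwise."""
    return np.where(flag, 0.8, 0.2) + rng.normal(0.0, 0.03, flag.size)

readings = {
    "tactile": sense(is_soft),    # felt when holding the object
    "visual": sense(is_bright),   # seen when observing it
    "audio": sense(is_loud),      # heard when shaking it
}

# The human says "soft" for soft objects but skips every tenth object,
# so the word is an imperfect signal.
heard_soft = is_soft & (idx % 10 != 0)

scores = word_modality_scores(readings, heard_soft)
grounded = max(scores, key=scores.get)
print(scores)
print("'soft' is most strongly tied to:", grounded)
```

In this toy setting the word co-occurs with only one of the two tactile clusters, while it is spread evenly across the visual and audio clusters, so the tactile modality receives the highest score. Richer probabilistic models would treat all modalities and words jointly; the sketch only conveys the intuition.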

Taniguchi recalls that his research was motivated by the fact that it is impossible to look directly into the brains of others to confirm how what he says is conveyed to them. “Even though it is hardly possible to obtain any feedback, we can believe that our intentions are understood by our counterpart,” he says. “How can it be possible?” After working on this question for approximately 20 years, he developed the idea of a symbol emergence system.

Until recently, the goal of robotics has been to enable robots to adapt to the physical world. Robots have been working in factories for decades, but factory interiors are arranged by humans to make work easier for robots. In other words, humans have eliminated the uncertainty in the physical environment to help robots, and robots capable of working in a nonuniform, physically uncertain environment have long been sought.

However, environmental uncertainty is not limited to the physical world but is also found in the semiotic world. In a linguistically uniform world, people might use pre-specified words and sentences to convey pre-specified meanings. For example, the AI in smart speakers is often implicitly based on the assumption of a homogeneous linguistic world. It is still very difficult to develop a robot that can cooperate with humans in the physical world through semiotic (e.g., linguistic) communication to accomplish concrete tasks. With the idea of a symbol emergence system, Taniguchi aims to develop cognitive dynamics that recognize uncertainties in both the physical and linguistic worlds and combine them appropriately.

The third theme is the Creation of an Interdisciplinary Field of Symbol Emergence in Systems Science. Taniguchi’s research is no longer confined to robotics but has broadened its scope to include philosophy, developmental psychology, cognitive science, linguistics, and semiotics (an interdisciplinary field that bloomed in the 20th century). Taniguchi suggests that by combining AI and robotics methodologies with those of the humanities or philosophy, he may be able to rearrange his previous points of view and start a new discussion. Research has advanced through the evolution of tools. Just as the development of new technologies (such as DNA sequencers) contributed to major developments in biotechnology in the latter part of the 20th century, philosophical research has in recent years actively used AI and robotics to express and test ideas about human language and the mind. With new research tools alongside paper and pencil, ways of understanding the human mind that have never existed before are expected to emerge in the near future.

Taniguchi says that his desire to understand humans and his aspiration to create robots that can live with humans are two sides of the same coin. “It is my fundamental desire to understand humans, but I also have a desire to reproduce in robotics the ability to adapt to the environment and acquire knowledge that is similar to that of humans. Since humans are currently the only beings capable of working in concert with humans at the semiotic and linguistic levels, I have no choice but to learn from humans.”

While we live in an era when artificial intelligence (AI) can perform amazing things through image recognition, machine translation, and voice recognition, it is still difficult for AI to provide flexible assistance, including performing physical tasks in domestic and commercial environments. Various efforts are being made to achieve this objective. At the World Robot Summit 2020, Taniguchi’s team won first prize in a contest staged at a convenience store.

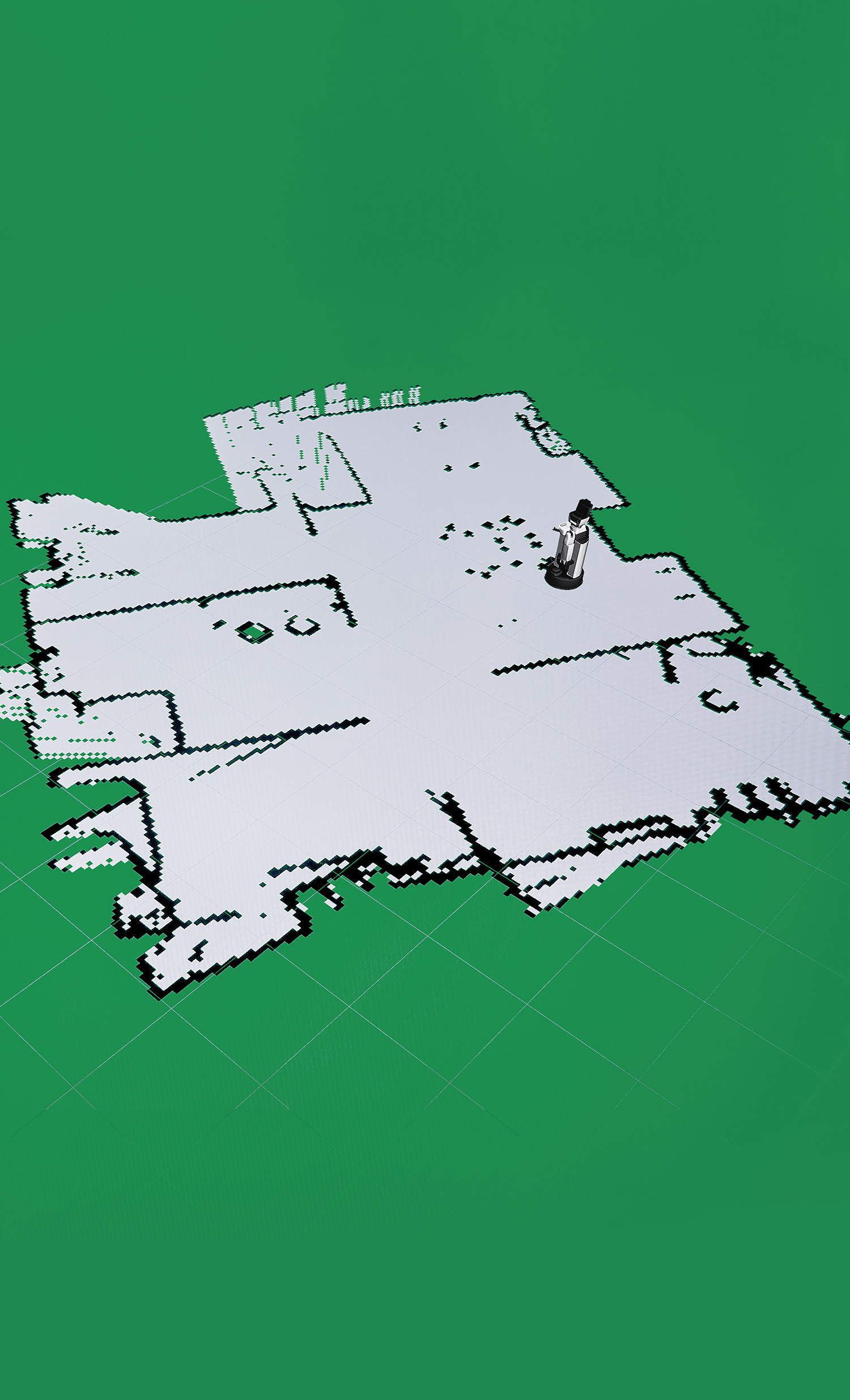

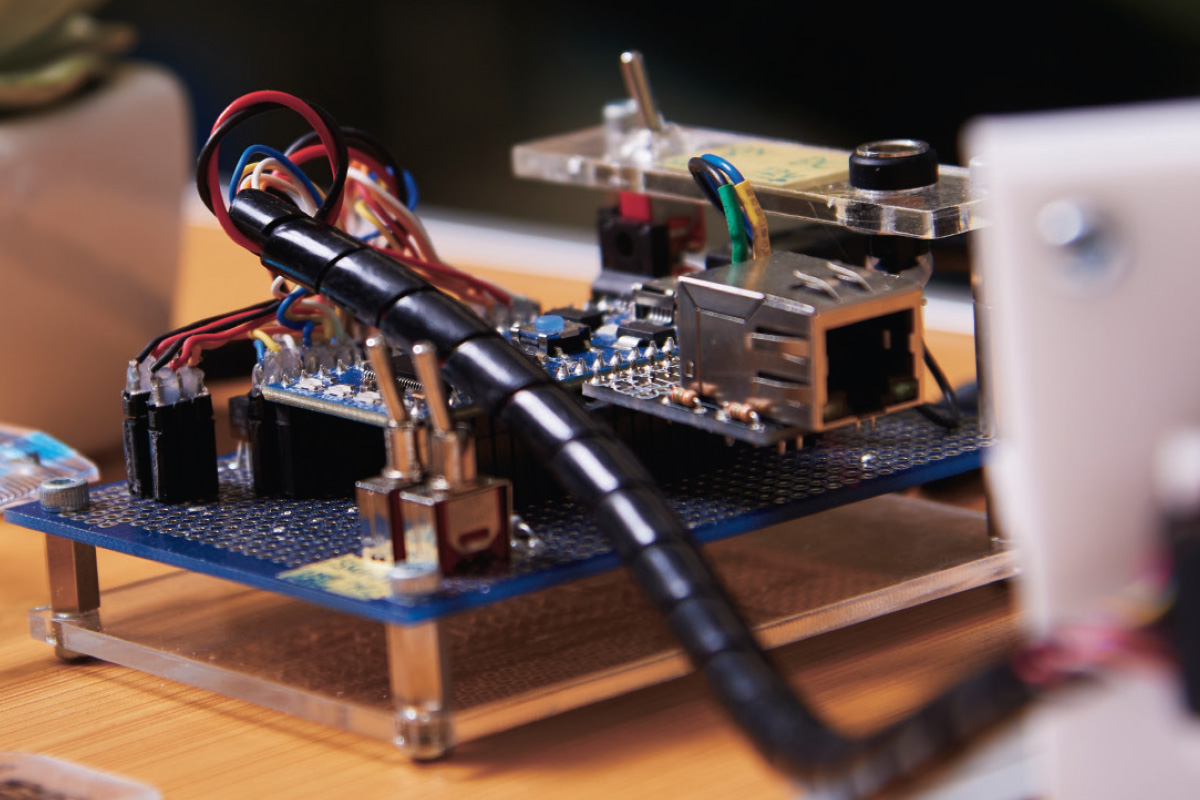

As the photograph shows, a robot does not necessarily need to have a body resembling that of a human. Various sensor-motor systems are being considered, such as proximity sensors that can measure the distance from a hand to an object and vacuum grippers that draw objects closer, and research and development of the intelligence to support service robots is underway.

At the end of the interview, Taniguchi turns to the childhood dream of AI embodied in science-fiction anime characters popular in Japan, such as Doraemon, Yui in “Sword Art Online,” and Tachikoma in “Ghost in the Shell.” He says that, ironically, the dream may have been losing its purity as AI has evolved. “Since around 2010, the industrial application of AI has been expanding, AI has become much more accessible to human life, and the meaning of AI changed,” he explains. “At the same time, however, there is a growing shortage of AI talent, and the financial benefit has become one of the reasons why teenagers pursue their career in AI research.” Although Taniguchi personally hopes that children will continue to have the pure dream of having an AI or a robot friend next to them, he admits that AI as a tool to replace some intellectual activity is the mainstream today, and that not many researchers aim to create artificial intelligence as an individual. Taniguchi concludes by reiterating that artificial intelligence as an individual is possible and that he would like to show what problems stand in the way of realizing it and how to resolve them.

- Tadahiro Taniguchi, Ph.D.

- Professor, College of Information Science and Engineering

- Specialties: Artificial intelligence, cognitive robotics, developmental robotics, machine learning, intelligent robotics, pattern recognition, cognitive science, human communication

- Research Theme: Symbol emergence in robotics