STORY #10

Artificial Intelligence Acquiring Vocabulary and Concepts in Ways Similar to a Child

Tadahiro Taniguchi

Professor, College of Information Science and Engineering

Yoshinobu Hagiwara

Assistant professor, College of Information Science and Engineering

Towards next-generation AI with Symbol Emergence in Robotics

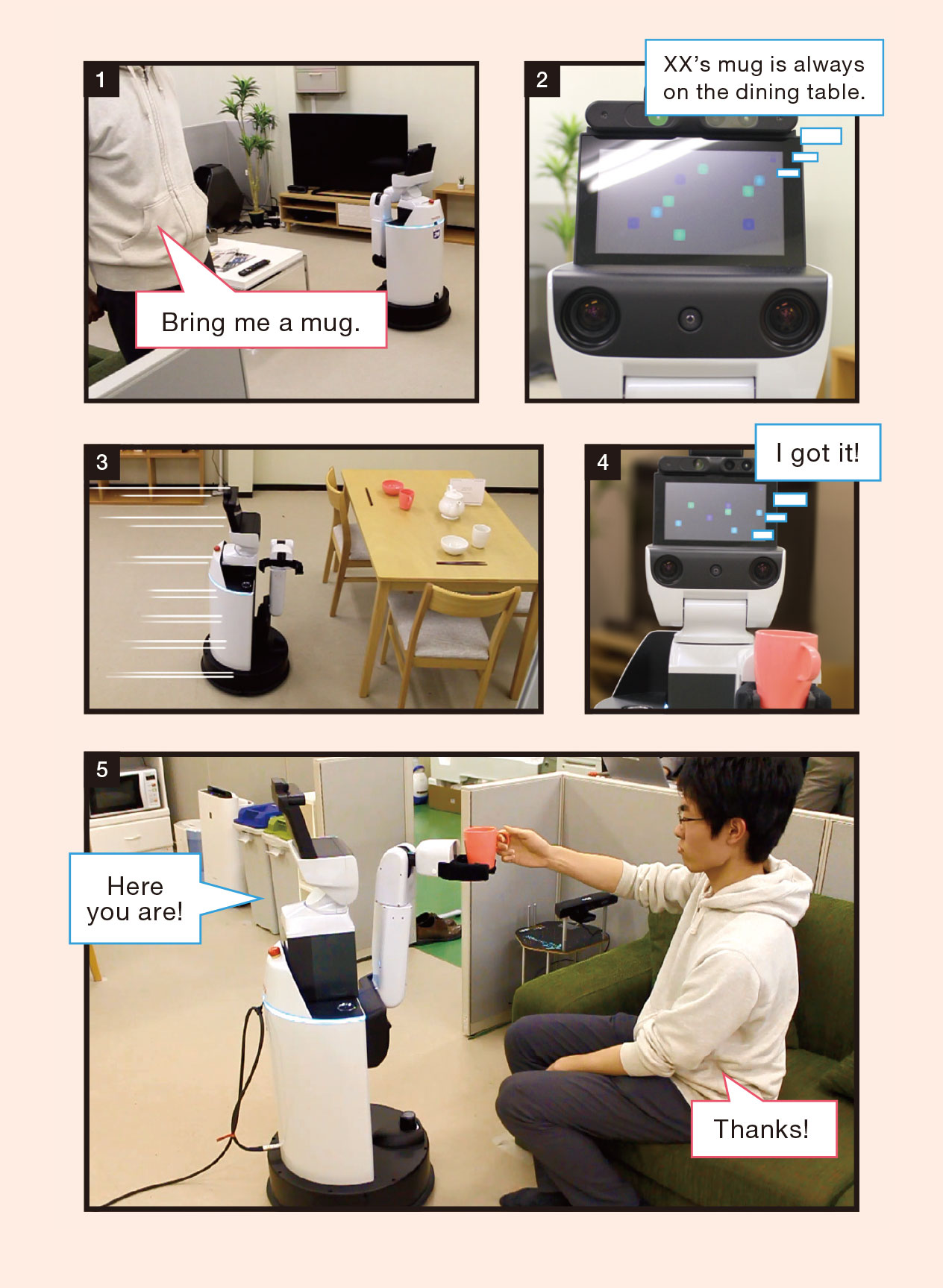

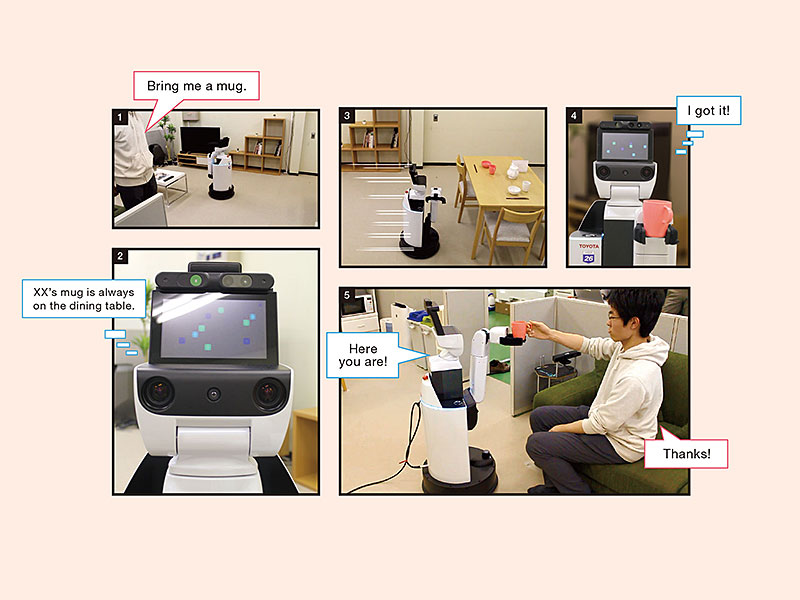

“Bring me a glass.” Asked this at home by a family member, most people will go straight to the kitchen, open the cupboard, and, if the request comes from their mother, perhaps choose the glass she prefers and bring it to her. Human beings can do all this from a single phrase, without any detailed explanation. Could robots do the same?

Tadahiro Taniguchi is trying to achieve this with an approach known as “Symbol Emergence in Robotics,” which differs from more common approaches. Together with Yoshinobu Hagiwara, he is developing a family-focused robot that can extend its knowledge on its own through communication with human beings.

“Each family has its own local rules and language. Take a kitchen as an example: its location, the things in it, and what they are called differ from family to family,” Hagiwara says. “We are in the process of creating a family-focused robot for individual families that can learn local rules and language from the bottom up and can support family life as each family’s situation requires.”

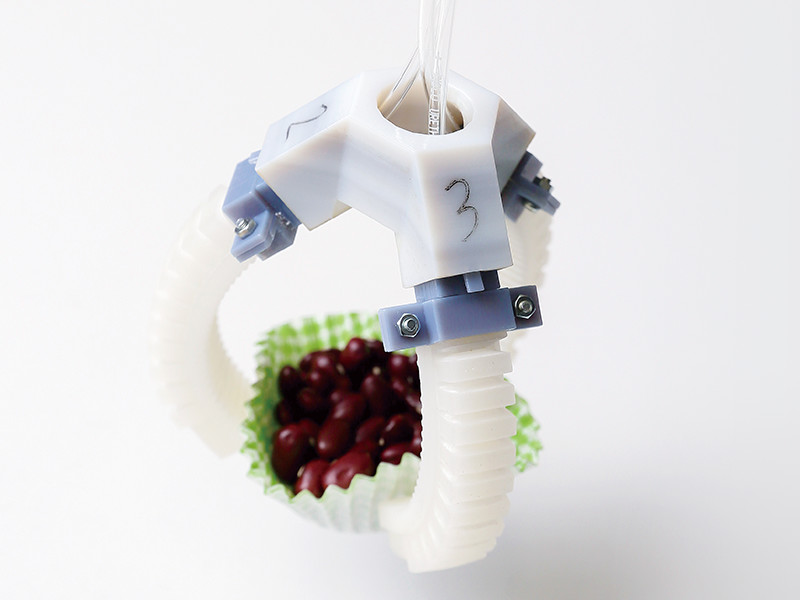

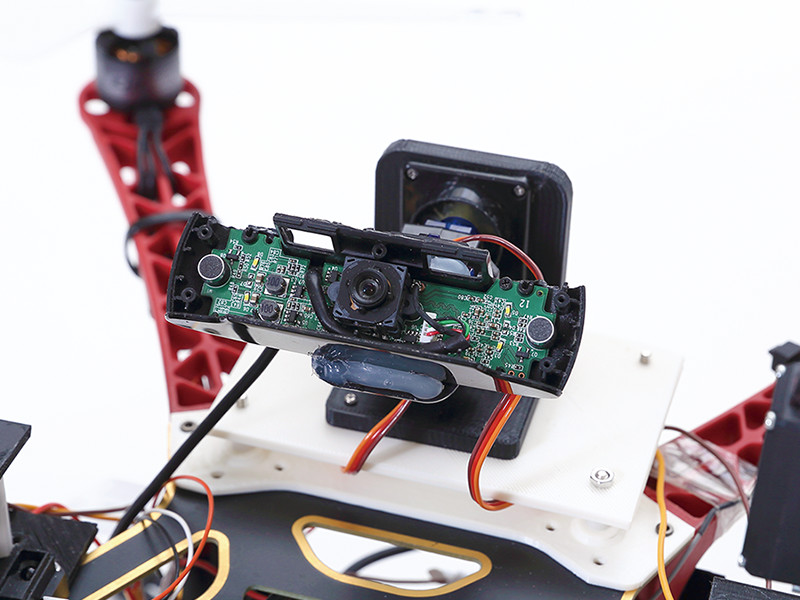

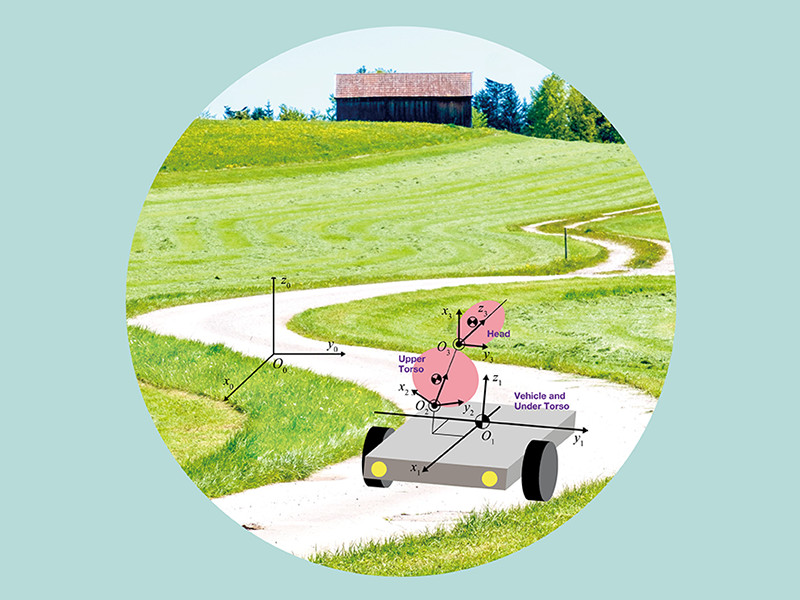

What is being developed is a robot that perceives the external environment in a multi-modal manner through multiple sensing devices mounted on it and, based on the information from these sensors, learns the locations where everyday objects are retrieved or placed. First, as sensory organs connecting it with human beings and the external environment, the robot is fitted with a camera to obtain visual information, a microphone for speech recognition, and distance sensors for estimating locations. Next, the generative process behind the acquired object images, the speech uttered by people, and the robot’s own position information is modeled. Finally, a method is established in which the robot estimates the parameters of the locations via Gibbs sampling and similar techniques; by repeating this process, the robot acquires location concepts.
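The estimation step can be illustrated with a deliberately simplified sketch. It is not the team's actual model: it assumes each location concept is just a 2-D Gaussian over robot positions (with a fixed spread) plus a word distribution, and it leaves out object images and speech recognition entirely. The function name `gibbs_location_concepts`, its parameters, and the synthetic "kitchen" and "table" data are all hypothetical and chosen only for illustration. The sampler alternates between assigning each observation to a concept and resampling each concept's parameters, which is the basic pattern of Gibbs sampling.

```python
import numpy as np

def gibbs_location_concepts(positions, words, n_concepts, n_words,
                            n_iters=200, sigma=0.5, tau=5.0, alpha=1.0, seed=0):
    """Toy Gibbs sampler (illustrative assumption, not the authors' model).

    Each concept k has a 2-D position mean mu[k] (known spread sigma,
    prior N(0, tau^2 I)) and a word distribution phi[k] (Dirichlet prior).
    """
    rng = np.random.default_rng(seed)
    n = len(positions)
    # Initialise means at randomly chosen observations, words uniform.
    mu = positions[rng.choice(n, n_concepts, replace=False)].copy()
    phi = np.full((n_concepts, n_words), 1.0 / n_words)

    for _ in range(n_iters):
        # 1) Sample the concept assignment of every observation
        #    given the current means and word distributions.
        sq_dist = ((positions[:, None, :] - mu[None, :, :]) ** 2).sum(axis=2)
        log_p = -0.5 * sq_dist / sigma**2 + np.log(phi[:, words].T)
        log_p -= log_p.max(axis=1, keepdims=True)   # numerical stability
        p = np.exp(log_p)
        p /= p.sum(axis=1, keepdims=True)
        z = np.array([rng.choice(n_concepts, p=p_t) for p_t in p])

        for k in range(n_concepts):
            members = z == k
            n_k = members.sum()
            # 2) Sample the position mean from its Gaussian posterior.
            precision = n_k / sigma**2 + 1.0 / tau**2
            mean = positions[members].sum(axis=0) / sigma**2 / precision
            mu[k] = rng.normal(mean, np.sqrt(1.0 / precision))
            # 3) Sample the word distribution from its Dirichlet posterior.
            counts = np.bincount(words[members], minlength=n_words)
            phi[k] = rng.dirichlet(alpha + counts)
    return z, mu, phi

# Synthetic example: two places, each always named with its own word.
rng = np.random.default_rng(1)
kitchen = rng.normal([0.0, 0.0], 0.3, size=(50, 2))   # word id 0
table = rng.normal([4.0, 4.0], 0.3, size=(50, 2))     # word id 1
positions = np.vstack([kitchen, table])
words = np.array([0] * 50 + [1] * 50)

z, mu, phi = gibbs_location_concepts(positions, words, n_concepts=2, n_words=2)
```

After sampling, `mu` holds one estimated position per concept and `phi` the words associated with it, so a word can be mapped to a likely place. The fixed spread `sigma` and the uniform prior over concepts are simplifications made to keep the sketch short.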

Hagiwara and his team also conduct demonstration tests using actual robots. In an experimental home space that combines a living room, dining room, and kitchen and contains a refrigerator, a cabinet, an oven rack, bookshelves, a TV, a table, a sofa, and other pieces of furniture, they have a robot learn a vocabulary indicating 11 locations, such as “in front of the table” or “in front of the dining room,” together with image information of objects, such as a glass, observed as it moves around. By repeating this process, the robot becomes able to predict the correct location from the available vocabulary with a probability of more than 70%, and can actually carry objects. “For example, for common items such as a glass or a table, the robot can set a yellow glass on the table in front of the dining room and a white glass on a large table. Based on the location concepts acquired through repeated interaction with the external environment in each family’s home, the robot has become able to carry each glass to a suitable location based on each family’s local rules.”

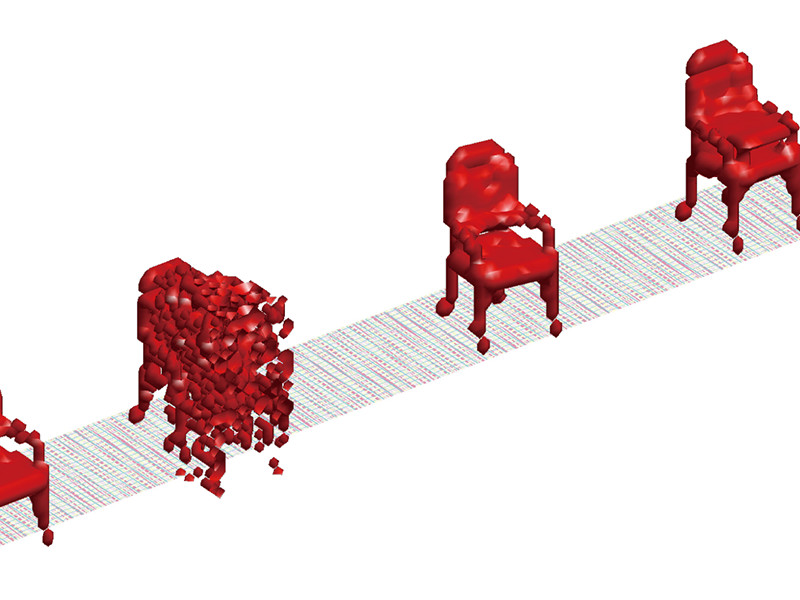

Graphical model of location concept learning

Estimating parameters based on position, objects, and language information

“The current era of AI, known as the third AI boom, depends on the processing power of computers that can handle vast amounts of data, in addition to deep learning and other machine learning techniques,” Taniguchi explains. However, no matter how much data is collected, it will not lead to the acquisition of the local knowledge described above. In response, Taniguchi says, “We are aiming at an AI that can form concepts from the bottom up by integrating multi-modal perceptual information acquired through communication and interaction with human beings and its environment. We want to create a next-generation AI that acquires intelligence through a process similar to the way a child comes to understand language and the environment step by step.” Symbol Emergence Systems provide the philosophical basis for this, and Taniguchi has been working on such systems for more than a decade.

“After we are born, we encounter language and symbols through the people around us, and we come to understand the meaning of concepts and language, local context including cultures and customs, and ambiguous expressions by communicating with others and interacting with our environments,” Taniguchi says. “The key point of Symbol Emergence Systems is to focus on these dynamic processes of concept and language acquisition.” With a blueprint of the intelligence that underpins human Symbol Emergence Systems, or of its computational principles, it will be possible to create AI that is completely different from existing forms.

What is essential for achieving this goal is a physical body that can accumulate a wealth of experience through contact with the external world. This involves not only building a program as a computational expression of intelligence but also creating a robot that has sensors and actuators and is interfaced with the real world. This is where the true value of Symbol Emergence in Robotics lies.

“It may sound paradoxical, but sooner or later, we may be able to improve our understanding of human activity via robots,” Taniguchi says. Through the study of Symbol Emergence Systems, he is eager to approach the essence of human activity from this perspective.

- Tadahiro Taniguchi [left]

- Professor, College of Information Science and Engineering

- Subjects of research: Constructive understanding of emergent systems involving human beings and technological applications

- Research keywords: Statistical science, cognitive science, human interface and interaction, intelligent informatics, soft computing, intelligent robotics, Kansei informatics, intelligent mechanics/mechanical systems

- Yoshinobu Hagiwara [right]

- Assistant professor, College of Information Science and Engineering

- Subjects of research: Location concept learning based on multi-modal information and applications for life support robots

- Research keywords: Human interface and interaction, intelligent informatics, intelligent robotics, measurement engineering