STORY #4

Mechanical Eyes Providing Innovation for Flying Robots

Kazuhiro Shimonomura

Associate professor, College of Science and Engineering

Fully utilizing visual sensors in the development of flying robots for aerial tasks

Autonomous, intelligent flying helicopters, or drones, first appeared only a few years ago. Since then, these flying robots based on multi-rotor helicopters have become very popular. Their applications have expanded from aerial photography to transporting supplies and other items, and recent developments are shifting toward flying robots that perform important tasks at sites such as civil engineering and construction projects. Research on touching and manipulating objects in flight, using robotic arms and hands mounted on the chassis, has already been reported, but many challenges remain before commercialization. Kazuhiro Shimonomura is tackling these challenges. He focuses on robot vision, the robot’s faculty of sight, while developing an aerial manipulation robot that can complete tasks in flight.

Many existing flying robots that perform work treat the space below the body as the workspace so that the attitude of the chassis stays stable. In contrast, Shimonomura is trying to create a robot with robotic hands on top of the chassis that extend upward to carry out tasks. “By realizing such a robot, we can greatly expand the range of work done by flying robots, including working in or inspecting tunnels, indoor ceilings, and the undersides of bridges,” he says, explaining its great potential.

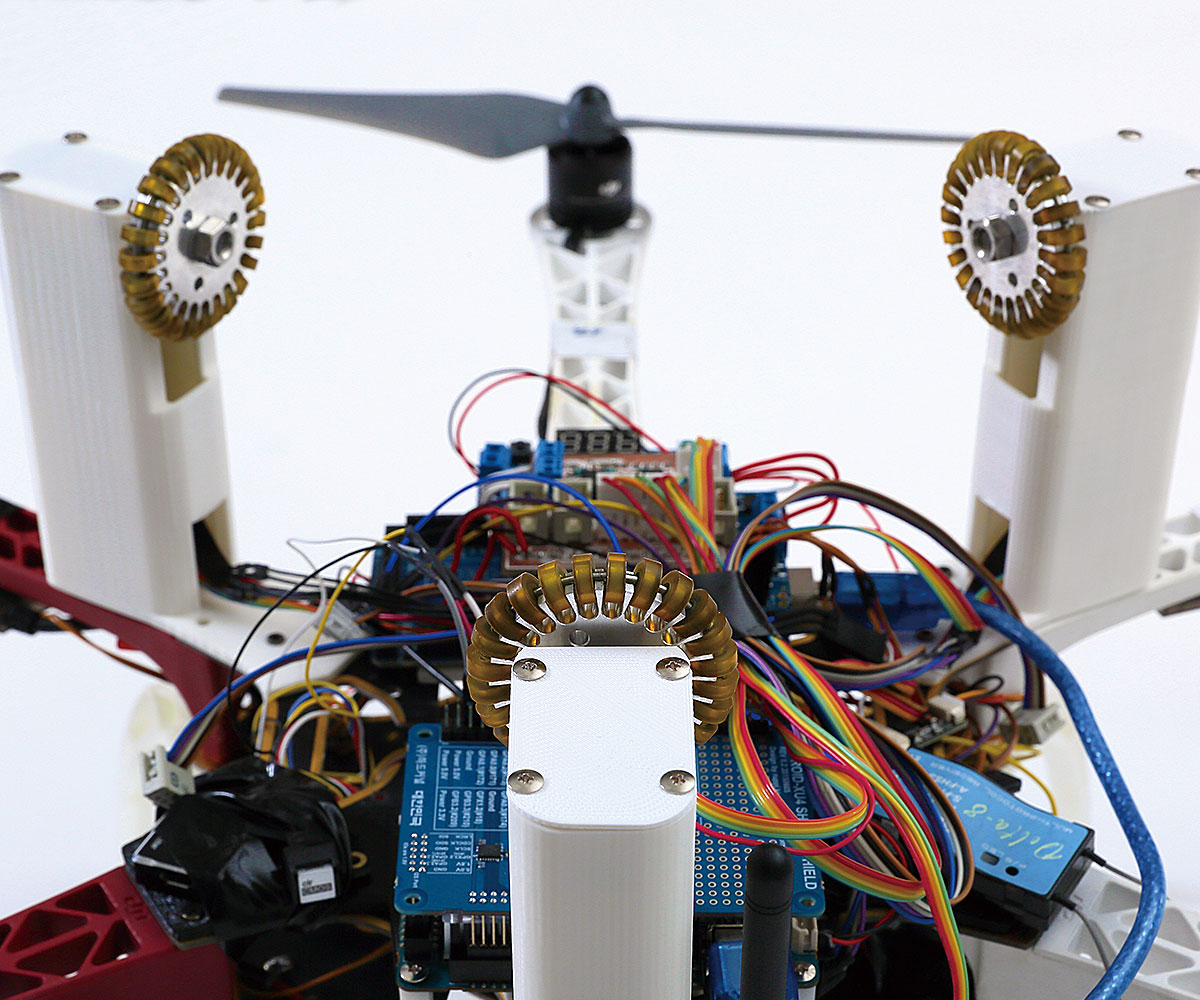

In developing a flying robot, the restrictions on weight and power consumption are severe, which makes development very difficult. Working while flying requires cameras and other sensing devices, computing and control equipment that processes the acquired data to control the robotic hands and the flying robot itself, and a battery that allows work to continue for a certain period of time. If all of these are mounted, however, the weight limit will be exceeded. The question is how much performance can be achieved with a compact, lightweight robot. That is where Shimonomura’s ideas come in.

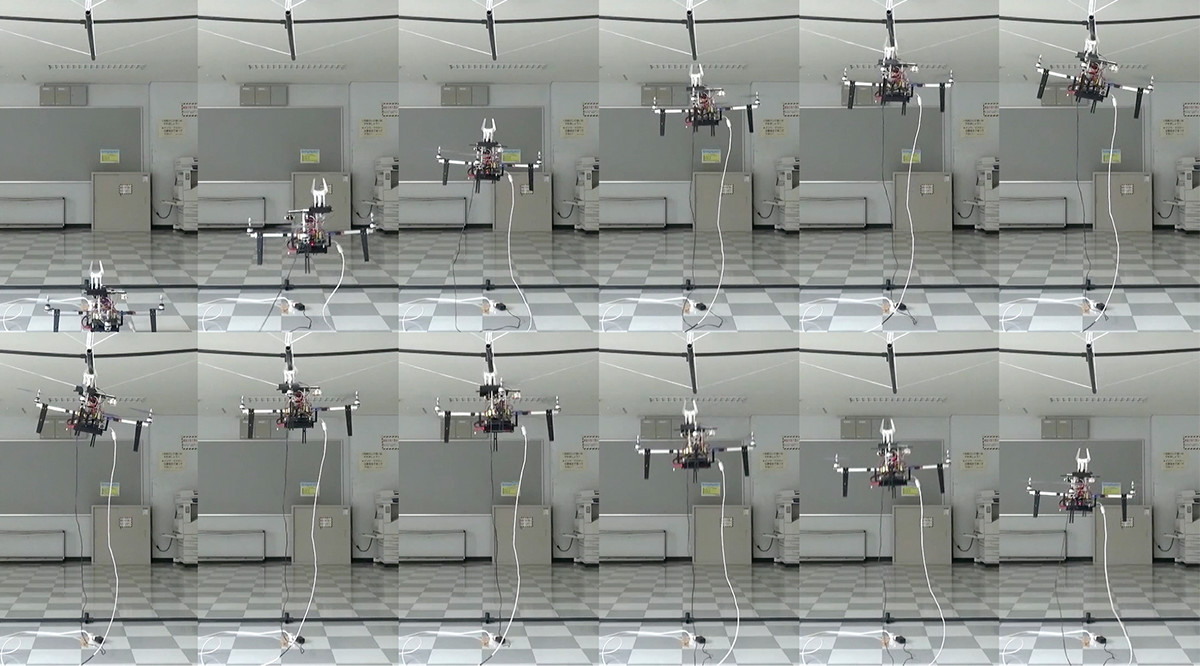

“We first built a vision system that detects objects while the robot is flying and shaking unsteadily, then controlled the positions of the robotic hands and integrated the whole process up to moving the fingers into the proper positions. We are advancing both the hardware and the software by building devices around a reprogrammable FPGA used as the embedded processor,” Shimonomura says. In fact, he has demonstrated that a flying robot with a robotic hand mounted on top can hold a stick-like object in midair or turn a bulb and remove it from its socket.

Flying robot holding a stick-like object and conducting various tasks

Process of a robot holding a pipe on a ceiling

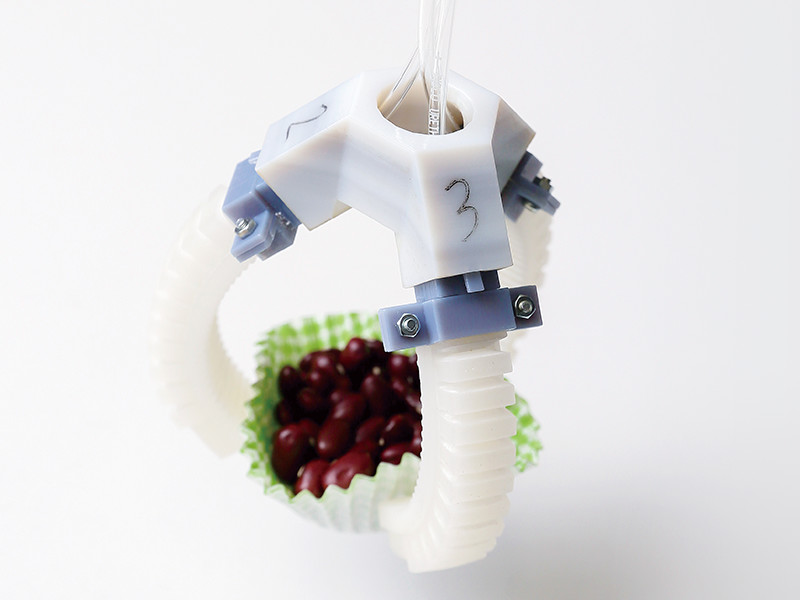

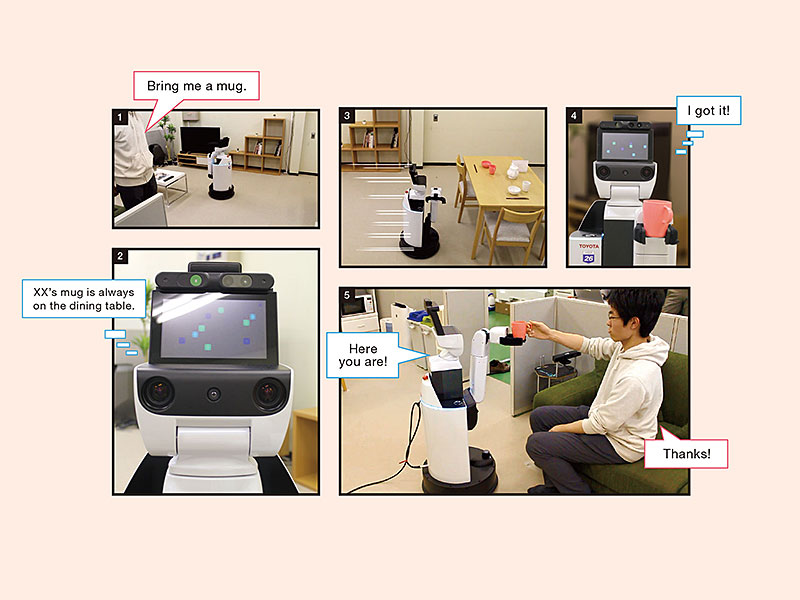

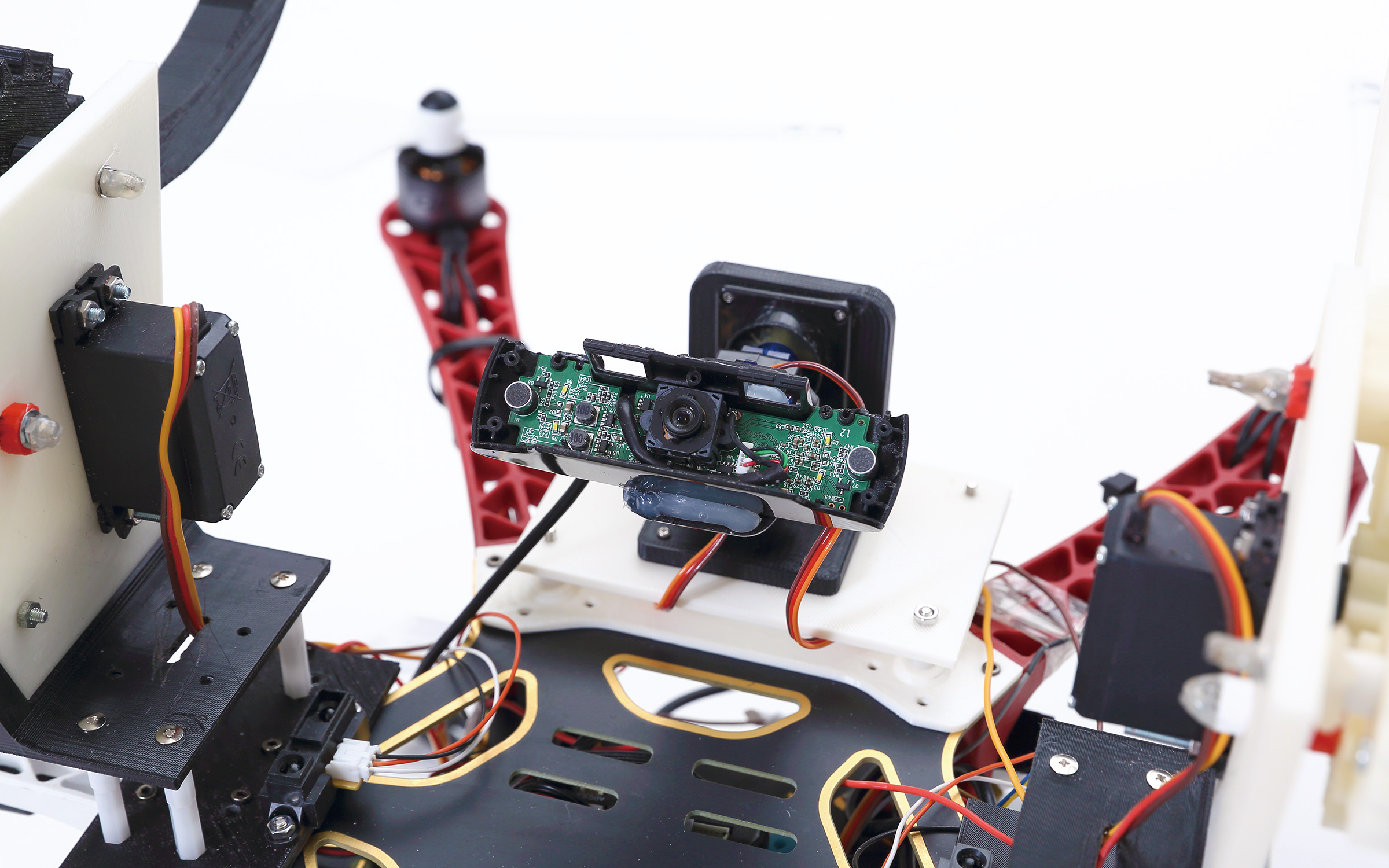

One of the essential sensors for a robotic hand to hold an object is a contact (tactile) sensor. Shimonomura is pursuing groundbreaking research to obtain this tactile sense with a visual sensor. The process by which a robotic hand holds an object consists of exploring for the object, approaching it, and holding it. Exploration requires cameras and other visual sensors, approach requires distance and proximity sensors, and holding requires tactile and pressure sensors. The data from all of these sensors must also be synchronized. However, as described above, it is difficult to mount all of these items on a flying robot with so many restrictions. Shimonomura has therefore developed a combined tactile and proximity optical sensor that employs a compound-eye camera. This compound-eye camera performs contact and proximity sensing simultaneously and obtains high-resolution contact information, which makes grasp control of the robotic hand possible.
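The explore, approach, and hold sequence described above can be pictured as a small decision rule that reads one combined sensor frame at a time. The following is only an illustrative sketch, not Shimonomura’s controller: the names `SensorReading` and `plan_step`, the contact threshold, and the speed gain are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Phase(Enum):
    EXPLORE = "explore"    # look for the object with the visual sensor
    APPROACH = "approach"  # close the gap using proximity information
    HOLD = "hold"          # contact detected, keep the grasp


@dataclass
class SensorReading:
    """One frame from a hypothetical combined tactile and proximity sensor."""
    target_visible: bool      # did the vision step find the object?
    distance_mm: float        # proximity estimate to the object
    contact_area_mm2: float   # contact area seen by the tactile part


def plan_step(reading, contact_threshold_mm2=5.0,
              gain_per_s=0.5, max_speed_mm_s=50.0):
    """Return (phase, closing speed in mm/s) for one reading.

    All thresholds and gains are assumed values for illustration.
    """
    if reading.contact_area_mm2 >= contact_threshold_mm2:
        # Enough contact: stop closing and hold the object.
        return Phase.HOLD, 0.0
    if reading.target_visible:
        # Slow down as the hand gets closer, capped at a maximum speed.
        speed = min(max_speed_mm_s, gain_per_s * reading.distance_mm)
        return Phase.APPROACH, speed
    # Nothing found yet: keep exploring without closing the hand.
    return Phase.EXPLORE, 0.0


if __name__ == "__main__":
    for r in (SensorReading(False, 0.0, 0.0),
              SensorReading(True, 200.0, 0.0),
              SensorReading(True, 40.0, 0.0),
              SensorReading(True, 0.0, 12.0)):
        print(r, "->", plan_step(r))
```

Because every field comes from the same frame in this sketch, no synchronization between separate sensors is needed, which is the practical appeal of a single combined sensor on a weight-limited robot.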

“Cameras and other visual sensors have a great many measurement points. You could say that no other tactile sensor performs as well,” Shimonomura declares. Traditionally, image processing required so much computation that calculation speed and computer capacity became problems, but Shimonomura has solved these issues by building an optimal control algorithm and using an embedded FPGA. In demonstration tests, it was confirmed that a robotic hand using only the combined sensor could hold objects made of different materials such as rubber, wood, and metal. In the near future, he plans to enable it to hold softer items such as food.

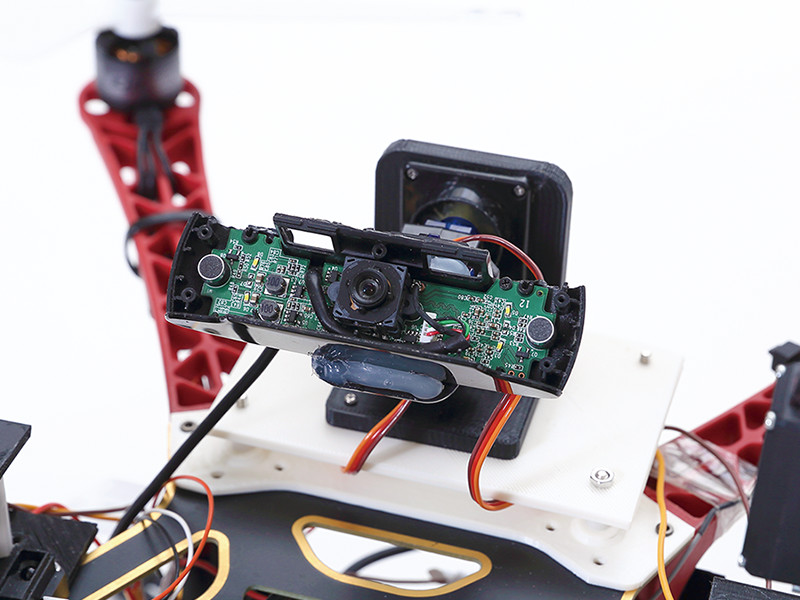

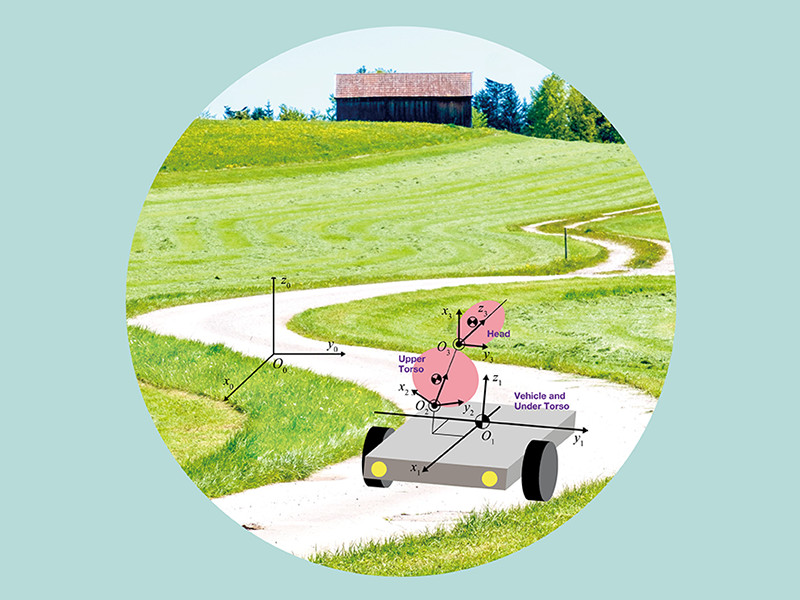

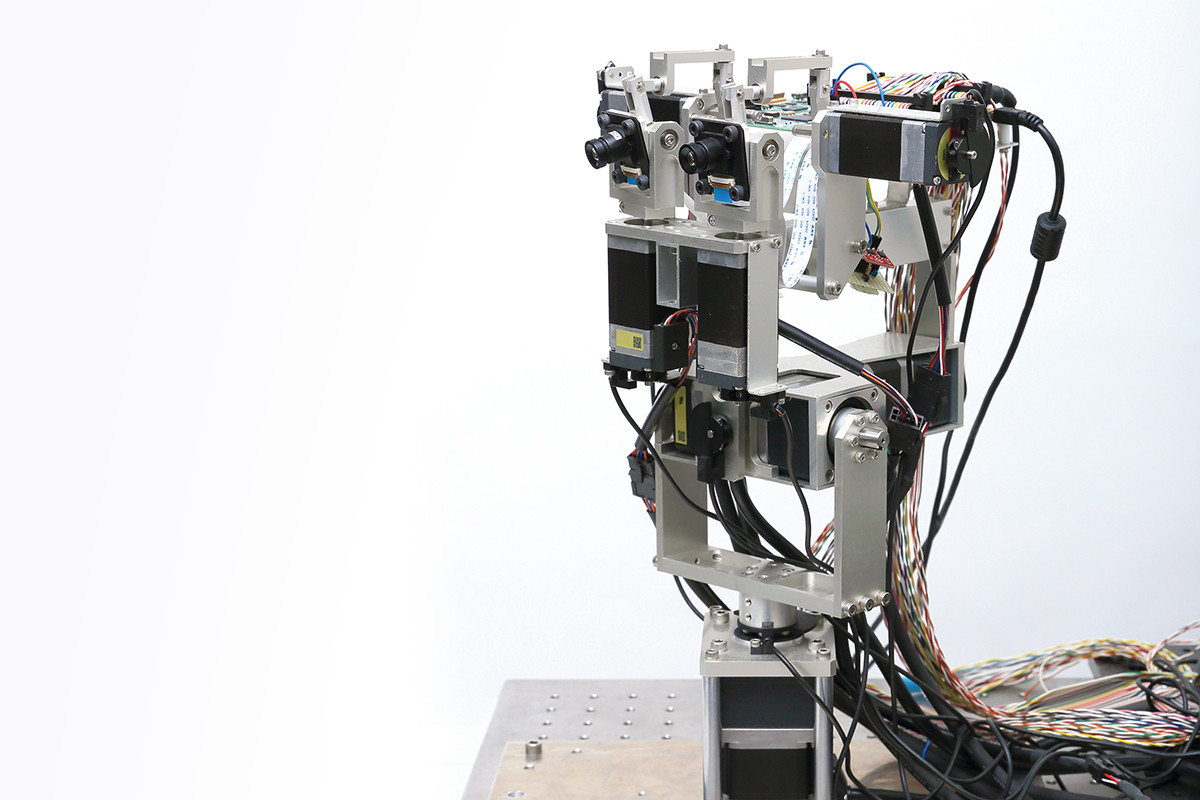

To achieve higher-performance vision technology, Shimonomura is also studying robotic heads. “Human beings can perceive objects clearly even while walking, when their heads are moving up and down. I want to stabilize a robot’s line of sight by imitating human neck and eye movements,” Shimonomura says. He has implemented motion control that keeps the target in view at all times by turning both eyes (cameras) and the head, using image information from the two cameras that serve as eyes together with the head’s angle and angular velocity measured by an inertial sensor (gyro sensor). All of these technologies will be applied to cameras mounted on a flying robot.
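One common way to combine the two information sources mentioned here is to counter-rotate the camera by the head rate the gyro measures and to use the image error to correct whatever drift remains. The single-axis simulation below is a rough sketch of that idea under strong assumptions (an ideal gyro, a distant target straight ahead, a sinusoidal head motion, and a hypothetical gain `kp`); it is not the controller used in Shimonomura’s robot head.

```python
import math


def max_gaze_error(use_gyro, kp=5.0, dt=0.001, duration=2.0,
                   amplitude=0.2, freq_hz=2.0):
    """Simulate one rotation axis and return the worst gaze error in radians.

    The head oscillates as amplitude * sin(omega * t). The camera turns
    relative to the head; the target sits at direction 0 in the world.
    All parameter values are assumptions made for this illustration.
    """
    omega = 2.0 * math.pi * freq_hz
    camera_angle = 0.0  # camera angle relative to the head
    worst = 0.0
    for i in range(int(duration / dt)):
        t = i * dt
        head_angle = amplitude * math.sin(omega * t)
        head_rate = amplitude * omega * math.cos(omega * t)  # ideal gyro reading
        # Image error: how far the line of sight is from the target.
        gaze_error = -(head_angle + camera_angle)
        worst = max(worst, abs(gaze_error))
        # Feedback from the image pulls the line of sight back to the target.
        command = kp * gaze_error
        if use_gyro:
            # Feedforward from the gyro cancels the head motion directly.
            command -= head_rate
        camera_angle += command * dt
    return worst


if __name__ == "__main__":
    print("image feedback only  :", max_gaze_error(use_gyro=False))
    print("image feedback + gyro:", max_gaze_error(use_gyro=True))
```

In this toy setup the image feedback alone is too slow to follow the head oscillation, while adding the gyro term keeps the line of sight close to the target, which loosely mirrors how human eye movements compensate for head motion.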

“My ultimate goal is a fully automated flying robot that can carry out a variety of tasks,” Shimonomura says with real enthusiasm. “How close can I get? It is a challenging but rewarding theme.”

Flying robot that achieves high-precision positioning by moving while pressing its wheels against the ceiling

Robot head

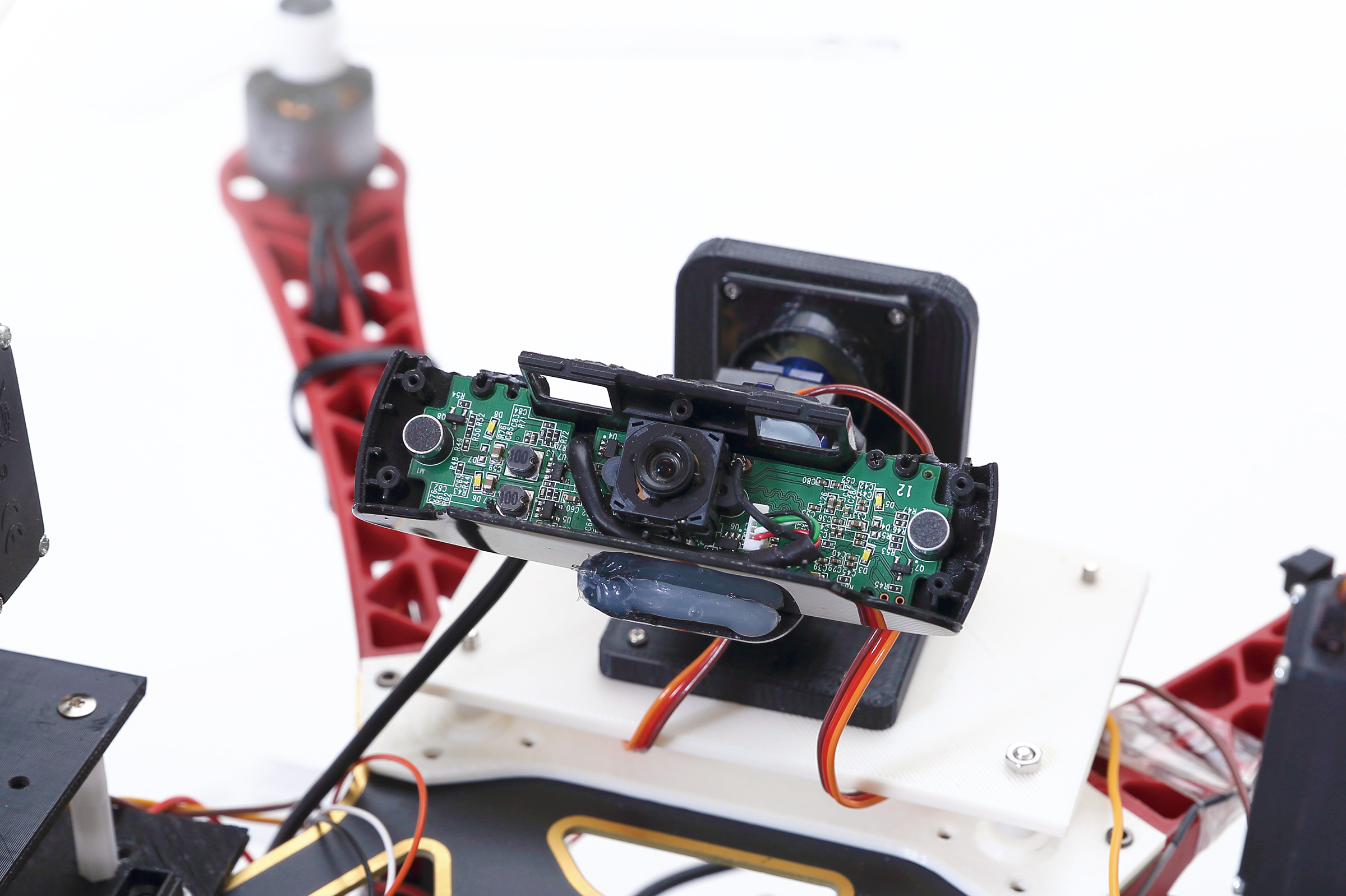

Visual sensor with pan/tilt mechanism mounted on a flying robot

- Kazuhiro Shimonomura

- Associate professor, College of Science and Engineering

- Subjects of research: Vision sensors and systems for intelligent robotic systems

- Research keywords: Perceptual information processing, intelligent robotics, electron devices/electronic equipment